AirObject: A Temporally Evolving Graph Embedding for Object Identification

Object encoding and identification are vital for robotic tasks such as autonomous exploration, semantic scene understanding, and re-localization. Previous approaches either track objects or generate descriptors for object identification. However, such systems are limited to a “fixed” object representation from a single viewpoint and are not robust to severe occlusion, viewpoint shift, perceptual aliasing, or scale transform. These single-frame representations tend to produce false correspondences among perceptually aliased objects, especially under severe occlusion. Hence, we propose AirObject, one of the first temporal object encoding methods, which aggregates the temporally “evolving” object structure as the camera or object moves. Because AirObject descriptors accumulate knowledge across multiple evolving representations of an object, they are robust to severe occlusion, viewpoint changes, deformation, perceptual aliasing, and scale transform.

Topological Object Representations

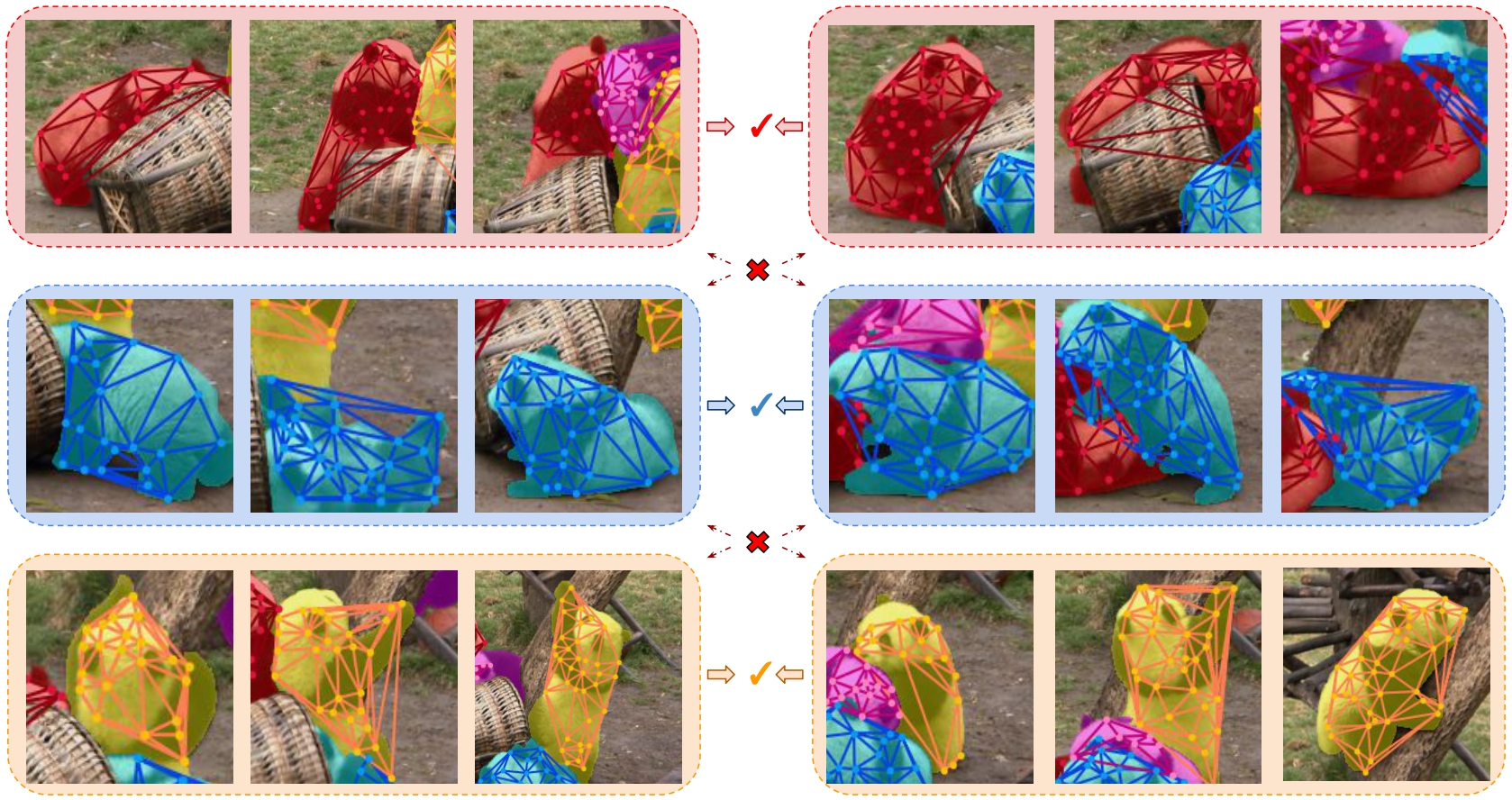

Intuitively, a group of feature points on an object forms a graph in which the feature points are the nodes and their associated descriptors are the node features. The graph’s nodes are the distinctive local features of the object, while its edges capture the object’s global structure. Hence, to learn the geometric relationships among the feature points, we construct a topological object graph for each frame using Delaunay triangulation. The resulting triangular mesh enables the graph attention encoder to reason better about the object’s structure, thereby making the final temporal object descriptor robust to deformation and occlusion.
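To make the graph construction concrete, here is a minimal sketch of turning one frame's keypoints into a Delaunay graph. It is not the AirObject implementation: the function names `in_circumcircle` and `delaunay_graph` and the sample keypoint coordinates are hypothetical, and the brute-force empty-circumcircle test is chosen only for readability. It assumes no four keypoints lie on a common circle with an empty interior; a real pipeline would more likely call a library routine such as `scipy.spatial.Delaunay`.

```python
from itertools import combinations


def in_circumcircle(a, b, c, p):
    """Return True if point p lies strictly inside the circumcircle of triangle abc."""
    # Orient the triangle counter-clockwise so the determinant sign is consistent.
    orient = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    if orient < 0:
        b, c = c, b
    ax, ay = a[0] - p[0], a[1] - p[1]
    bx, by = b[0] - p[0], b[1] - p[1]
    cx, cy = c[0] - p[0], c[1] - p[1]
    det = ((ax * ax + ay * ay) * (bx * cy - cx * by)
           - (bx * bx + by * by) * (ax * cy - cx * ay)
           + (cx * cx + cy * cy) * (ax * by - bx * ay))
    return det > 1e-12


def delaunay_graph(points):
    """Brute-force Delaunay triangulation of 2D points.

    A triple of points is kept as a triangle when no other point falls
    strictly inside its circumcircle. Returns (triangles, edges), both as
    tuples of point indices. Roughly O(n^4), so only for small keypoint sets.
    """
    triangles, edges = [], set()
    for i, j, k in combinations(range(len(points)), 3):
        a, b, c = points[i], points[j], points[k]
        area2 = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
        if abs(area2) < 1e-12:
            continue  # skip collinear triples, which have no circumcircle
        others = (points[m] for m in range(len(points)) if m not in (i, j, k))
        if any(in_circumcircle(a, b, c, p) for p in others):
            continue
        triangles.append((i, j, k))
        edges.update({(i, j), (i, k), (j, k)})  # i < j < k, so edges are ordered
    return triangles, sorted(edges)


# Hypothetical keypoints: four corners of an object plus one interior point.
keypoints = [(0, 0), (2, 0), (2, 2), (0, 2), (1, 1)]
triangles, edges = delaunay_graph(keypoints)
print(len(triangles), "triangles;", len(edges), "edges")
```

The returned edge list is the adjacency structure; each node would additionally carry its keypoint descriptor as the node feature.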

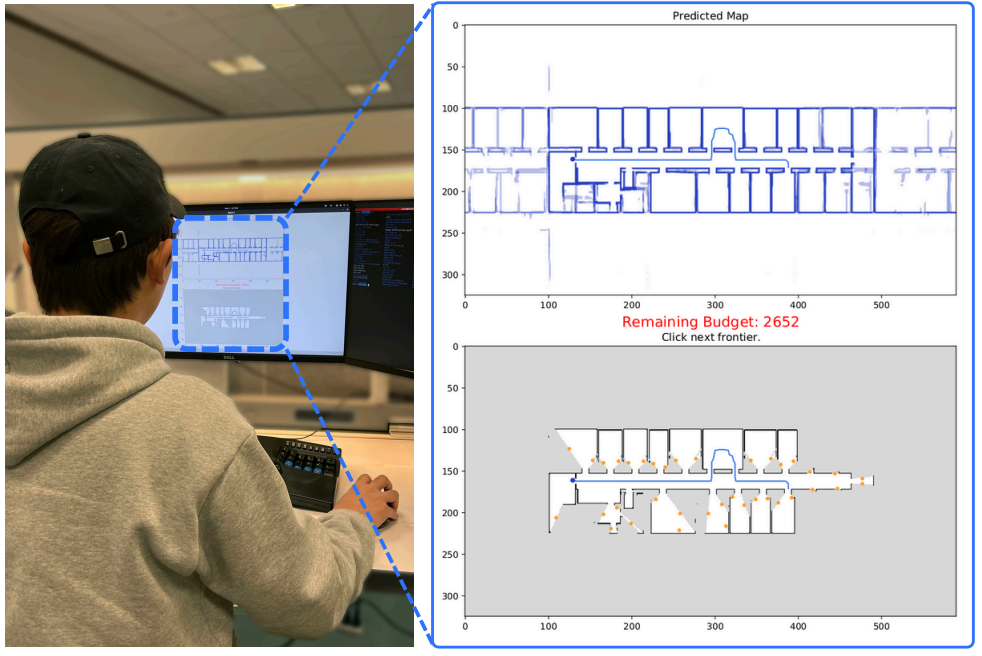

Simple & Effective Temporal Object Encoding Method

Our method is simple and contains only three modules. Specifically, we use deep-learned keypoint features extracted across multiple frames to form sequences of object-wise topological graph neural networks (GNNs), which, once embedded, yield temporal object descriptors. We apply a graph attention-based sparse encoding method to these topological GNNs to generate content graph features and location graph features that represent the structural information of the object. These graph features are then aggregated across multiple frames by a single-layer temporal convolutional network to generate a temporal object descriptor. The resulting object descriptors are robust to severe occlusion, perceptual aliasing, viewpoint shift, deformation, and scale transform, and they outperform state-of-the-art single-frame and sequential descriptors.
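The data flow described above (per-frame attention over the object graph, then temporal aggregation across frames) can be illustrated with a deliberately simplified sketch. Everything here is an assumption for illustration rather than the authors' architecture: `attention_pool` uses parameter-free scaled dot-product attention instead of learned weights, it does not separate content and location features, and `temporal_descriptor` applies one hypothetical scalar kernel shared across all feature channels where a trained temporal convolution would learn channel-mixing weights.

```python
import math


def attention_pool(node_feats, edges):
    """Attend over each node's graph neighbours, then mean-pool to one frame feature."""
    n, dim = len(node_feats), len(node_feats[0])
    neighbours = {i: {i} for i in range(n)}  # self-loops keep every softmax non-empty
    for i, j in edges:
        neighbours[i].add(j)
        neighbours[j].add(i)
    updated = []
    for i in range(n):
        nbrs = sorted(neighbours[i])
        # Scaled dot-product score between node i and each of its neighbours.
        scores = [sum(a * b for a, b in zip(node_feats[i], node_feats[j])) / math.sqrt(dim)
                  for j in nbrs]
        top = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        updated.append([sum(w / total * node_feats[j][d] for w, j in zip(weights, nbrs))
                        for d in range(dim)])
    return [sum(row[d] for row in updated) / n for d in range(dim)]


def temporal_descriptor(frame_feats, kernel):
    """Single-layer 1D convolution over time, averaged over steps and L2-normalised."""
    k, dim = len(kernel), len(frame_feats[0])
    steps = len(frame_feats) - k + 1  # 'valid' convolution, no padding
    if steps < 1:
        raise ValueError("need at least as many frames as kernel taps")
    conv = [[sum(kernel[t] * frame_feats[s + t][d] for t in range(k)) for d in range(dim)]
            for s in range(steps)]
    pooled = [sum(row[d] for row in conv) / steps for d in range(dim)]
    norm = math.sqrt(sum(v * v for v in pooled)) or 1.0  # avoid division by zero
    return [v / norm for v in pooled]


# Hypothetical input: three frames of the same object, each a (node features, edges) pair.
frames = [
    ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [(0, 1), (1, 2), (0, 2)]),
    ([[0.9, 0.1], [0.1, 0.9], [1.0, 1.0]], [(0, 1), (1, 2), (0, 2)]),
    ([[0.8, 0.2], [0.2, 0.8]], [(0, 1)]),  # one keypoint occluded in this frame
]
frame_feats = [attention_pool(feats, edges) for feats, edges in frames]
descriptor = temporal_descriptor(frame_feats, kernel=[0.25, 0.5, 0.25])
print(descriptor)
```

Because the descriptor is L2-normalised, two objects could then be compared with a plain dot product (cosine similarity). The number of nodes may differ between frames, as in the third frame here, since each frame is pooled to a fixed-size feature before temporal aggregation.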

Video

Publication

@inproceedings{keetha2022airobject,
  title = {AirObject: A Temporally Evolving Graph Embedding for Object Identification},
  author = {Keetha, Nikhil Varma and Wang, Chen and Qiu, Yuheng and Xu, Kuan and Scherer, Sebastian},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2022},
  url = {https://arxiv.org/abs/2111.15150}
}

Please refer to our Paper and Code for details.

Contact

- Nikhil Varma Keetha <keethanikhil [at] gmail [dot] com>

- Chen Wang <chenwang [at] dr.com>

- Sebastian Scherer <basti [at] cmu [dot] edu>