TartanAir: A Dataset to Push the Limits of Visual SLAM

Abstract

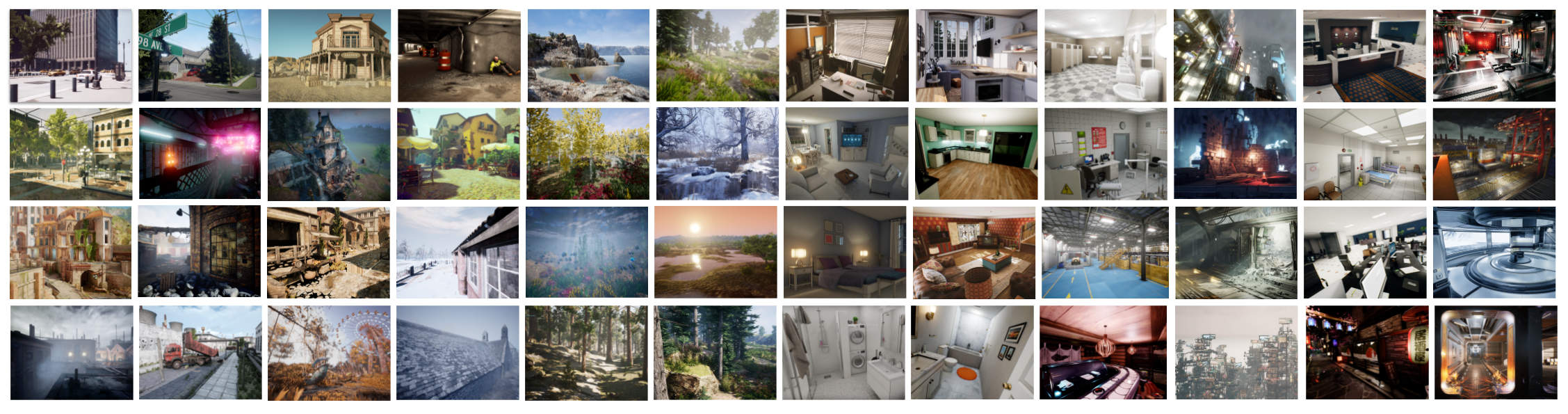

We present TartanAir, a challenging dataset for robot navigation tasks and more. The data is collected in photo-realistic simulation environments in the presence of various light conditions, weather, and moving objects. By collecting data in simulation, we are able to obtain multi-modal sensor data and precise ground truth labels, including stereo RGB images, depth images, segmentation, optical flow, camera poses, and LiDAR point clouds. We set up a large number of environments with various styles and scenes, covering challenging viewpoints and diverse motion patterns that are difficult to achieve with physical data collection platforms. To enable data collection at such a large scale, we develop an automatic pipeline, including mapping, trajectory sampling, data processing, and data verification. We evaluate the impact of various factors on visual SLAM algorithms using our data. Results of state-of-the-art algorithms reveal that the visual SLAM problem is far from solved: methods that show good performance on established datasets such as KITTI don't perform well in more difficult scenarios. Although we use simulation, our goal is to push the limits of Visual SLAM algorithms in the real world by providing a challenging benchmark for testing new methods, as well as large, diverse training data for learning-based methods.

Citation

Please read our paper for details.

@article{tartanair2020iros,
  title = {TartanAir: A Dataset to Push the Limits of Visual SLAM},
  author = {Wang, Wenshan and Zhu, Delong and Wang, Xiangwei and Hu, Yaoyu and Qiu, Yuheng and Wang, Chen and Hu, Yafei and Kapoor, Ashish and Scherer, Sebastian},
  booktitle = {2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  year = {2020}
}

Download

The dataset is published on the Azure Open Datasets platform. Please check here for instructions on accessing the data.

Sample trajectories can be downloaded here.

abandonedfactory | abandonedfactory_night | amusement | carwelding | endofworld | gascola | hospital | japanesealley | neighborhood | ocean | office | seasidetown | seasonsforest | seasonsforest_winter | soulcity | westerndesert

The mission

Simultaneous Localization and Mapping (SLAM) is one of the most fundamental capabilities necessary for robots. Due to the ubiquitous availability of images, Visual SLAM (V-SLAM) has become an important component of many autonomous systems. Impressive progress has been made with both geometry-based methods and learning-based methods. However, developing robust and reliable SLAM methods for real-world applications is still a challenging problem. Real-life environments are full of difficult cases such as changing or insufficient illumination, dynamic objects, and texture-less scenes. Current popular benchmarks such as KITTI, the TUM RGB-D SLAM datasets, and EuRoC MAV cover relatively limited scenarios and motion patterns compared to real-world cases.

We collect a large dataset using photo-realistic simulation environments. We minimize the sim2real gap by utilizing a large number of environments with various styles and diverse scenes. A special goal of our dataset is to focus on challenging environments with changing light conditions, adverse weather, and dynamic objects. State-of-the-art SLAM algorithms struggle to track the camera pose in our dataset and frequently get lost on some challenging sequences. We propose a metric to evaluate the robustness of the algorithm. In addition, we develop an automatic data collection pipeline, which allows us to process more environments with minimal human intervention.

The four most important features of our dataset are:

- Large-scale, diverse, realistic data

- Multimodal ground truth labels

- Diversity of motion patterns

- Challenging scenes

Dataset features

Simulated scenes

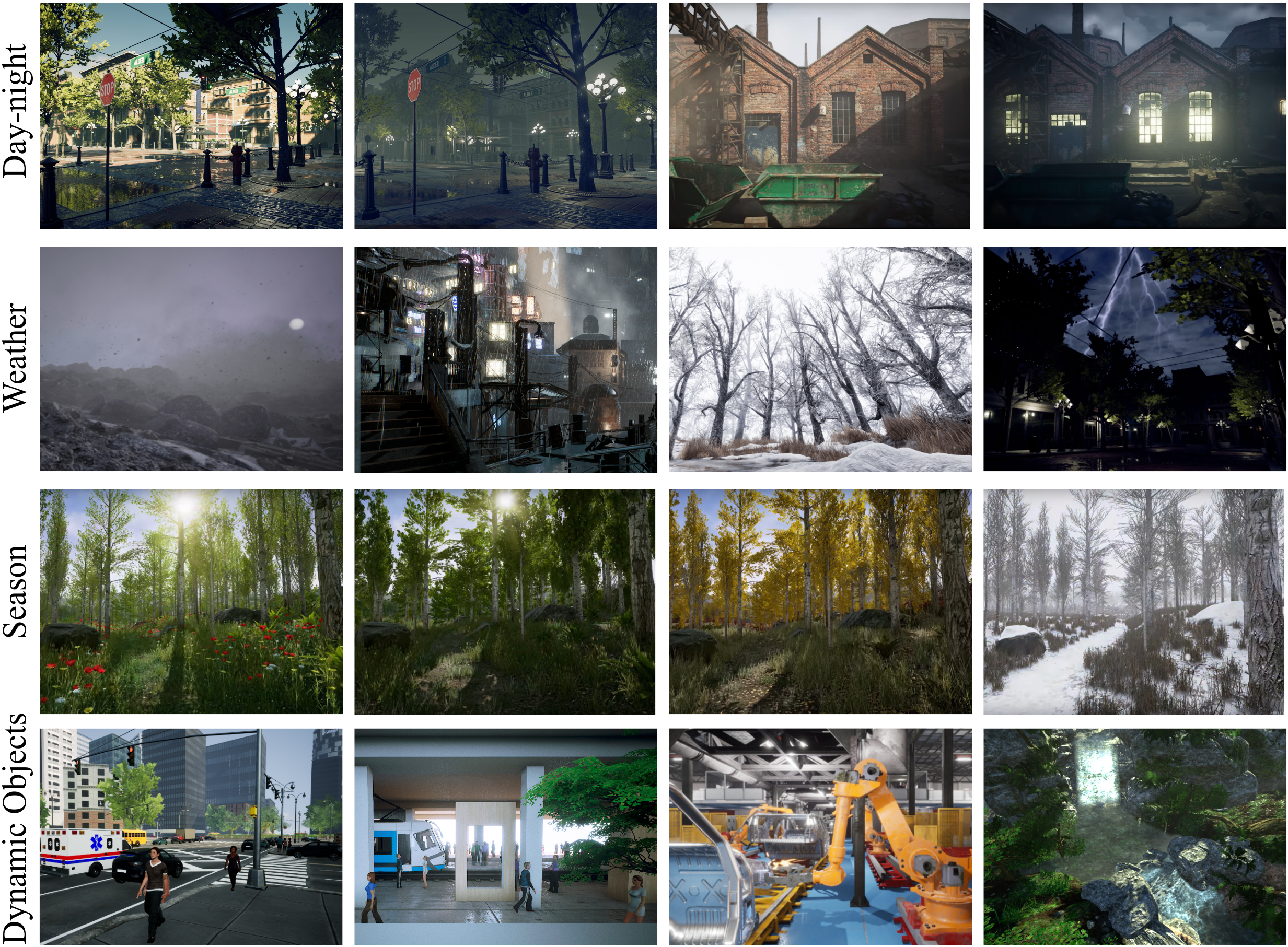

We have adopted 30 photo-realistic simulation environments in the Unreal Engine. The environments provide a wide range of scenarios that cover many interesting yet challenging situations. The simulation scenes consist of:

- Indoor and outdoor scenes with detailed 3D objects. We have multi-room, richly decorated indoor environments. For outdoor simulations, there are various kinds of buildings, trees, terrains, and landscapes.

- Special purpose facilities and ordinary household scenes.

- Rural and urban scenes.

- Real-life and sci-fi scenes.

Challenging visual effects

In some environments, we simulate multiple types of challenging visual effects.

- Hard lighting conditions. Day-night alternation. Low lighting. Rapidly changing illumination.

- Weather effects. Clear, rain, snow, wind, and fog.

- Seasonal change.

Diverse ego motions

In each simulated environment, we gather data by following multiple routes and moving with different levels of aggressiveness. The virtual camera can move slowly and smoothly, without sudden jitter, or it can make intense, violent movements mixed with significant roll and yaw motions.

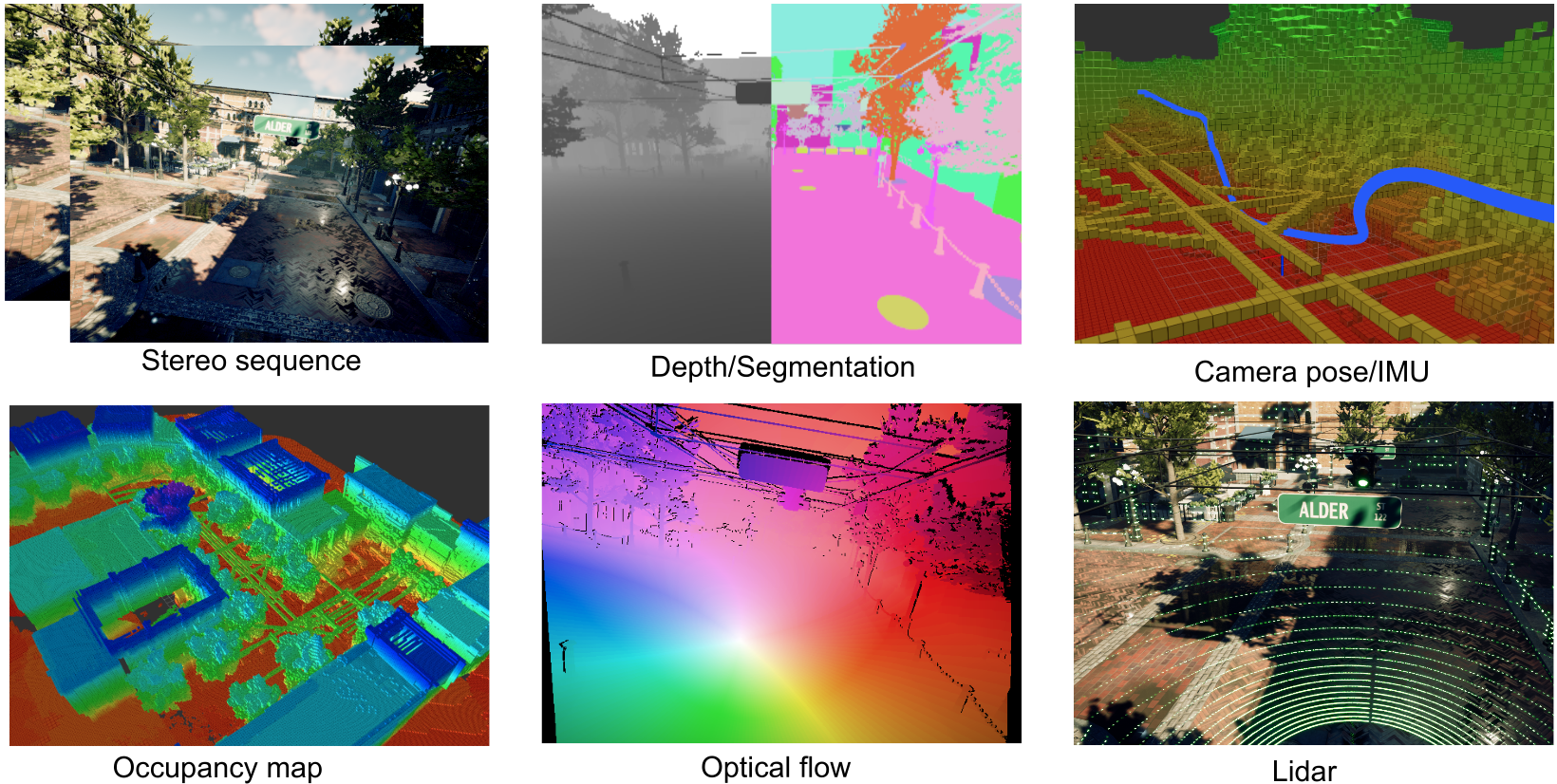

Multimodal ground truth labels

By leveraging the Unreal Engine and AirSim, we can extract various types of ground truth labels, including depth, semantic segmentation labels, and camera poses. From the extracted raw data, we further compute other ground truth labels such as optical flow, stereo disparity, simulated multi-line LiDAR points, and simulated IMU readings.

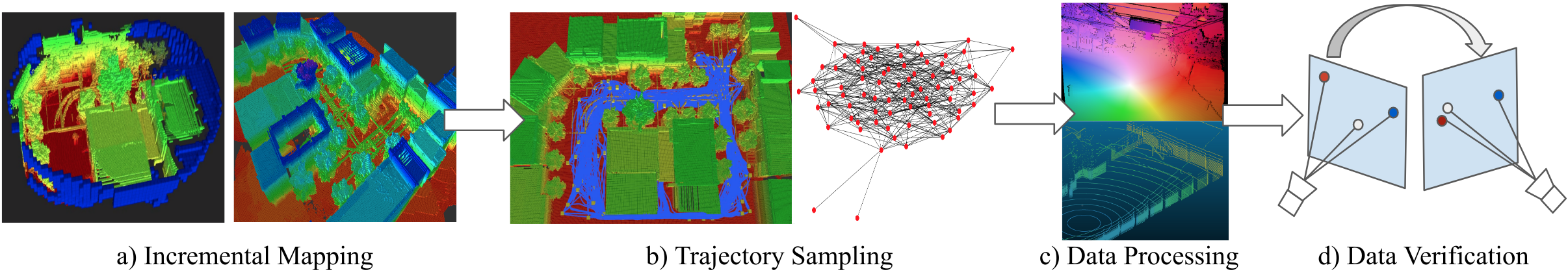
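As an illustration of how one derived label can follow from the raw data, the sketch below converts a metric depth map into stereo disparity using the standard rectified-stereo relation, disparity = focal length × baseline / depth. This is a minimal sketch, not the dataset's processing code: the function name `depth_to_disparity` and the values of `FOCAL_PX` and `BASELINE_M` are hypothetical and should be replaced with the actual camera parameters from the dataset documentation.

```python
import math
from typing import List


def depth_to_disparity(
    depth_m: List[List[float]], focal_px: float, baseline_m: float
) -> List[List[float]]:
    """Convert a metric depth map to stereo disparity in pixels.

    For a rectified stereo pair: disparity = focal_px * baseline_m / depth.
    Non-positive, infinite, or NaN depths (e.g. sky) map to 0.0 disparity.
    """
    if focal_px <= 0 or baseline_m <= 0:
        raise ValueError("focal_px and baseline_m must be positive")
    scale = focal_px * baseline_m
    return [
        [scale / d if 0.0 < d < math.inf else 0.0 for d in row]
        for row in depth_m
    ]


# Hypothetical camera parameters, chosen only for illustration.
FOCAL_PX = 320.0    # focal length in pixels (assumption)
BASELINE_M = 0.25   # stereo baseline in meters (assumption)

# A tiny 2x2 depth map in meters; the last pixel is at infinite depth.
depth = [[1.0, 2.0], [4.0, float("inf")]]
disparity = depth_to_disparity(depth, FOCAL_PX, BASELINE_M)
print(disparity)
```

Closer surfaces produce larger disparities, and pixels with no valid depth are assigned zero here by convention; a real pipeline might instead mark them with a mask.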

Data acquisition pipeline

We develop a highly automated pipeline to facilitate data acquisition. For each environment, we build an occupancy map by incremental mapping. Based on the map, we then sample a set of trajectories for the virtual camera to follow. A set of virtual cameras follows the trajectories to capture raw data from Unreal Engine and AirSim. The raw data are processed to generate labels such as optical flow, stereo disparity, simulated LiDAR points, and simulated IMU readings. Finally, in data verification, we check the data synchronization and the accuracy of the derived labels.
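The trajectory-sampling step can be pictured with a toy example. The sketch below assumes a 2D occupancy grid (0 = free, 1 = occupied), picks random free cells as waypoints, and connects them with a breadth-first-search path. The page does not say which planner the pipeline uses, so breadth-first search is a stand-in, and the names `shortest_path`, `sample_trajectory`, and `GRID` are hypothetical.

```python
import random
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def shortest_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Breadth-first search over 4-connected free cells (0 = free, 1 = occupied)."""
    rows, cols = len(grid), len(grid[0])
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None  # goal is not reachable from start


def sample_trajectory(grid: List[List[int]], num_waypoints: int, rng: random.Random) -> List[Cell]:
    """Chain paths between randomly sampled free cells into one trajectory."""
    free = [(r, c) for r, row in enumerate(grid) for c, v in enumerate(row) if v == 0]
    if not free:
        raise ValueError("occupancy grid has no free cells")
    trajectory = [rng.choice(free)]
    reached, attempts = 0, 0
    while reached < num_waypoints and attempts < 100 * num_waypoints:
        attempts += 1
        goal = rng.choice(free)
        if goal == trajectory[-1]:
            continue
        path = shortest_path(grid, trajectory[-1], goal)
        if path is None:
            continue  # waypoint lies in a disconnected region; resample
        trajectory.extend(path[1:])
        reached += 1
    return trajectory


# Toy occupancy map (assumption): 0 = free, 1 = occupied.
GRID = [
    [0, 0, 0, 1],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
trajectory = sample_trajectory(GRID, num_waypoints=3, rng=random.Random(0))
print(trajectory)
```

A real pipeline would work in 3D, smooth the path, and assign camera orientations along it; the grid version only shows how an occupancy map constrains where the camera can go.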

Contact

Email tartanair@hotmail.com if you have any questions about the data source. You can also reach out to contributors on the associated [GitHub](https://github.com/microsoft/AirSim).

Wenshan Wang - (wenshanw [at] andrew [dot] cmu [dot] edu)

Sebastian Scherer - (basti [at] cmu [dot] edu)

Acknowledgments

This work was supported by the Office of Naval Research under award number N0014-19-1-2266. We thank Ratnesh Madaan, Guada Casuso, Rashaud Savage, and other team members from Microsoft for the technical support!

Terms of use

This work is licensed under a Creative Commons Attribution 4.0 International License.