SubT UAV Code Release

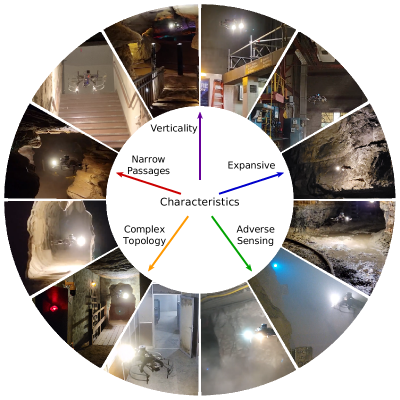

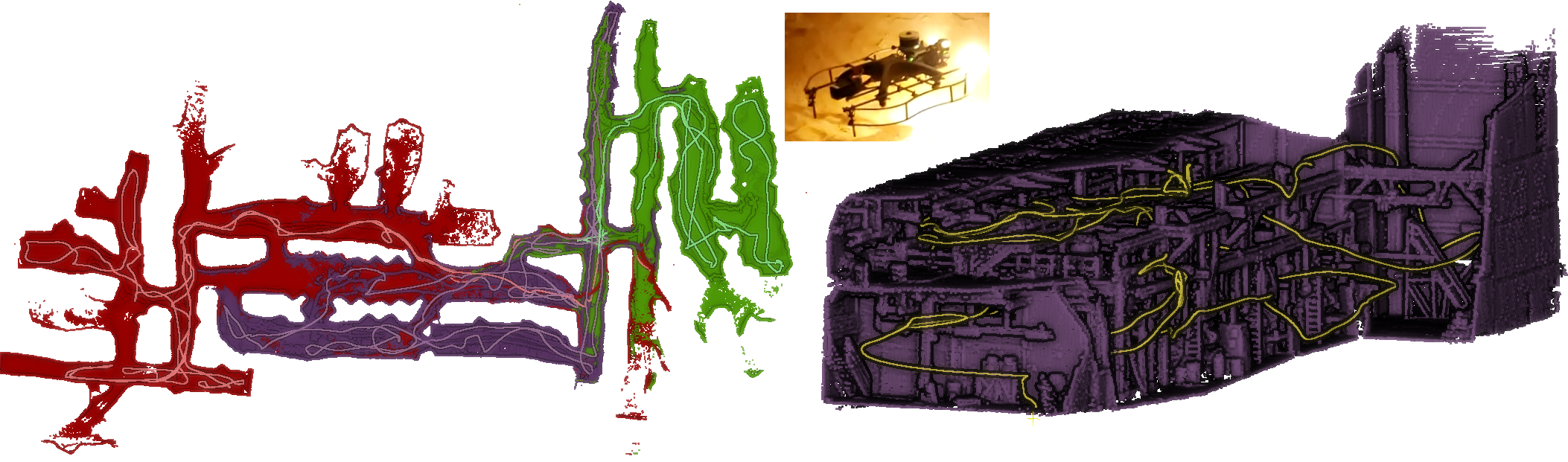

This is the code accompanying the paper Resilient Multi-Sensor Exploration of Multifarious Environments with a Team of Aerial Robots [1], which describes Team Explorer’s exploration strategy for a team of UAVs in the DARPA SubT competition.

The code should be run on an Ubuntu 18.04 system with ROS Melodic and OpenCL installed. The procedure for installing OpenCL depends on which type of GPU your system has. If you have an NVIDIA GPU and have CUDA installed, then you should already have OpenCL. To check, install clinfo with sudo apt install clinfo and then run clinfo. If it reports a number of platforms greater than 0, OpenCL is installed.
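The OpenCL check can also be scripted. The following is a minimal sketch, not part of the repository: opencl_platform_count is a hypothetical helper name, and it assumes clinfo prints a summary line beginning with "Number of platforms".

```shell
#!/usr/bin/env bash
# Sketch: report how many OpenCL platforms clinfo can see.

# Hypothetical helper: reads clinfo output on stdin and prints the platform count.
# Assumes the summary line looks like "Number of platforms   <N>".
opencl_platform_count() {
    awk '/^Number of platforms/ { print $NF; found = 1; exit }
         END { if (!found) print 0 }'
}

if command -v clinfo >/dev/null 2>&1; then
    count=$(clinfo 2>/dev/null | opencl_platform_count)
    if [ "${count:-0}" -gt 0 ]; then
        echo "OpenCL looks installed ($count platform(s) found)"
    else
        echo "clinfo found no OpenCL platforms"
    fi
else
    echo "clinfo is not installed; try: sudo apt install clinfo"
fi
```

If no platforms are reported, install the OpenCL runtime for your GPU vendor before building.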

Building the Code Natively

Download and build the code by running the following:

git clone git@bitbucket.org:castacks/subt_uav.git

cd subt_uav/

./install_dependencies.sh

./build.sh
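Because the build assumes Ubuntu 18.04 and ROS Melodic, it can help to confirm both before running the steps above. This is a hedged sketch rather than anything shipped with the repository: check_platform is a hypothetical helper, and it assumes /etc/os-release exists and that ROS_DISTRO is set by sourcing a ROS setup file.

```shell
#!/usr/bin/env bash
# Sketch: confirm the stated prerequisites before building.

# Hypothetical helper: compares an Ubuntu version and a ROS distro name
# against the ones this README names (18.04 and melodic).
check_platform() {
    local ubuntu_version="$1"
    local ros_distro="$2"
    local status=0
    if [ "$ubuntu_version" != "18.04" ]; then
        echo "warning: expected Ubuntu 18.04, found '${ubuntu_version:-unknown}'"
        status=1
    fi
    if [ "$ros_distro" != "melodic" ]; then
        echo "warning: expected ROS melodic, found '${ros_distro:-none sourced}'"
        status=1
    fi
    return "$status"
}

# VERSION_ID comes from /etc/os-release; ROS_DISTRO is set once ROS is sourced.
version_id=$( . /etc/os-release 2>/dev/null && echo "$VERSION_ID" ) || true
if check_platform "$version_id" "${ROS_DISTRO:-}"; then
    echo "platform matches the README requirements"
fi
```

A warning here does not necessarily mean the build will fail, only that the system differs from the one the code was written for.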

Running Examples Natively

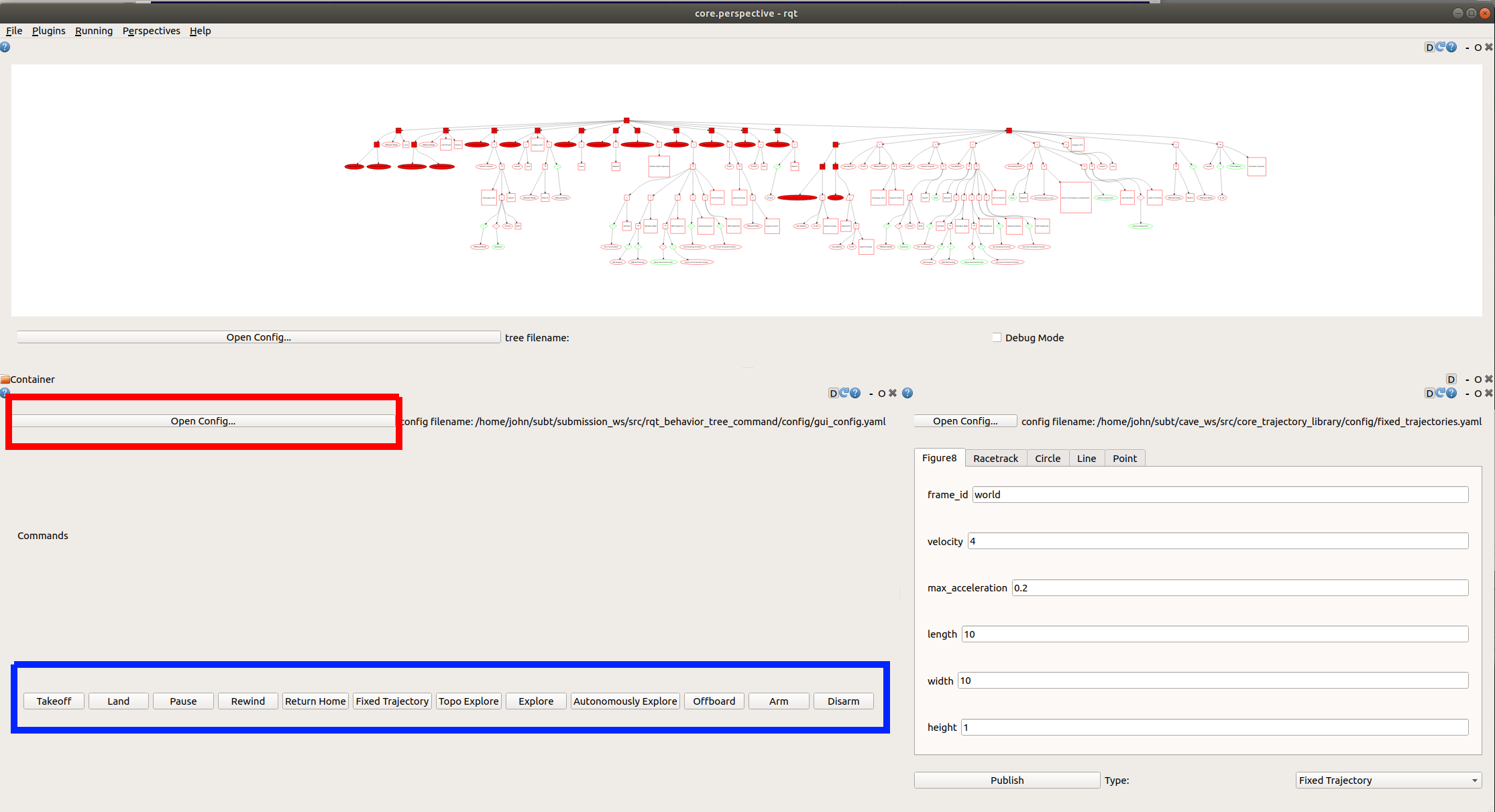

The following commands can be used to launch the UAV in different environments. The first time you launch everything, the gui below will not have the buttons highlighted in blue. To load the buttons, press the Open Config button highlighted in red and select gui.yaml from the open file dialog box. To avoid doing this each time you laucnh the sim, save the perspective using the the Perspectives drop down menu at the top, then selecting Export, and overwriting the core.perspective file.

First run

source devel/setup.bash

To launch the UAV in a small room run:

mon launch core_central sim_main.launch world:=~/subt/final_ws/src/core_gazebo_sim/worlds/room.world

To launch the UAV in an indoor hallway environment run:

mon launch core_central sim_main.launch world:=~/subt/final_ws/src/core_gazebo_sim/worlds/hawkins_qualification.world

To launch the UAV in an indoor two-story building run:

mon launch core_central sim_main.launch world:=~/subt/final_ws/src/core_gazebo_sim/worlds/filmmakers2.world
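The three commands above differ only in the world file, so a small wrapper can build them from a short name. This is a sketch under assumptions: sim_launch_command and WORLD_DIR are hypothetical names, and the default directory simply mirrors the path used in the commands above, which you will likely need to change for your own workspace.

```shell
#!/usr/bin/env bash
# Sketch: build the launch command for a named world.

# WORLD_DIR is an assumption; point it at the worlds directory of your workspace.
WORLD_DIR="${WORLD_DIR:-$HOME/subt/final_ws/src/core_gazebo_sim/worlds}"

# Hypothetical helper: prints the mon launch command for one of the
# three worlds named in this README.
sim_launch_command() {
    case "$1" in
        room|hawkins_qualification|filmmakers2)
            echo "mon launch core_central sim_main.launch world:=${WORLD_DIR}/$1.world"
            ;;
        *)
            echo "unknown world: $1" >&2
            return 1
            ;;
    esac
}

# Print the command rather than running it, so it can be inspected first.
sim_launch_command room
```

Inside the Docker container the same helper should work with WORLD_DIR set to /home/ws/src/core_gazebo_sim/worlds.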

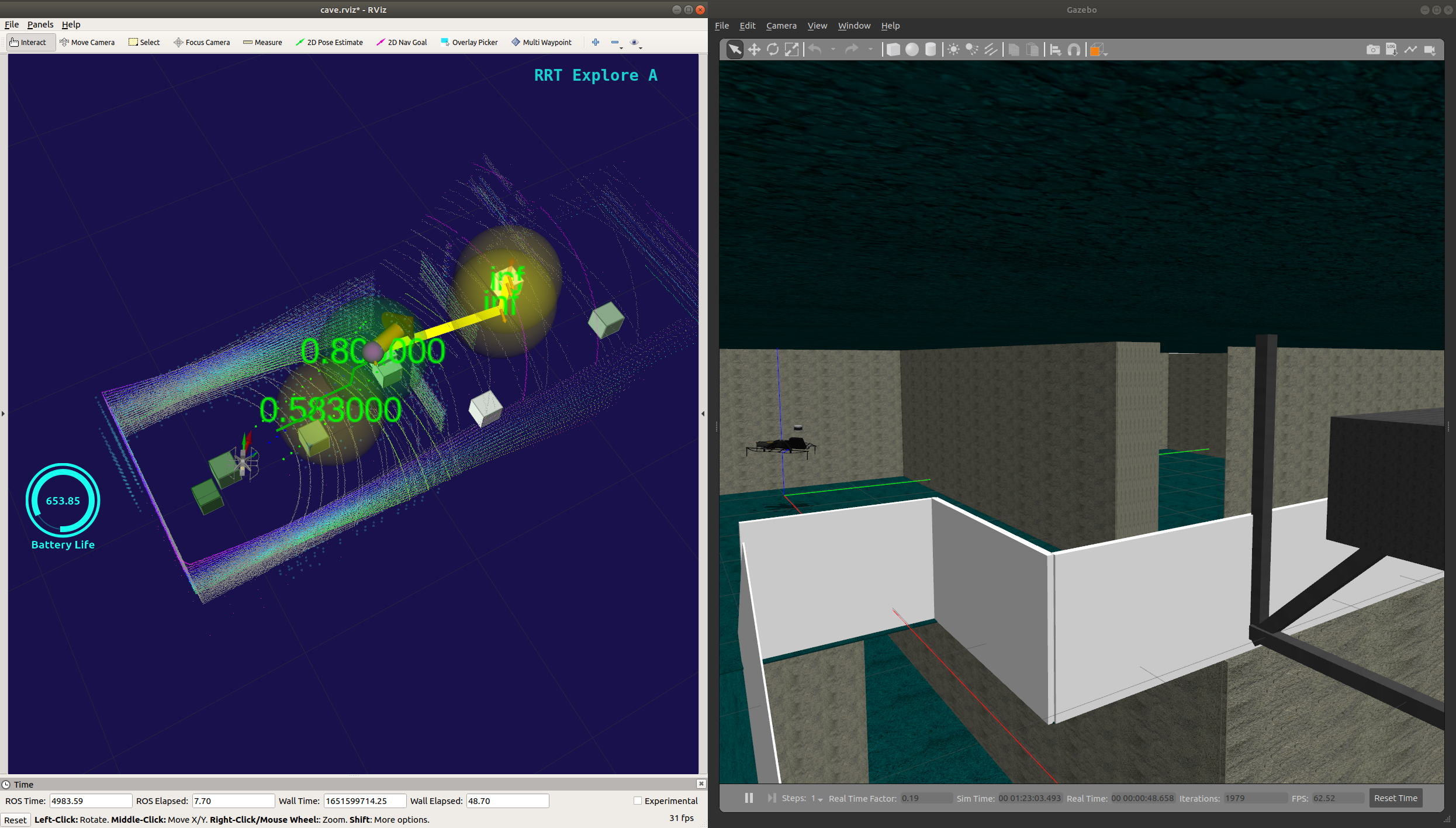

In addition to the gui shown above, rviz and gazebo windows will also launch and should look like the following:

Building the Code in Docker

Run:

cd subt_uav

./docker_build.sh

Running Examples in Docker

Run:

cd subt_uav

./docker_run.sh

Inside docker, enter the workspace and source it:

cd /home/ws

source devel/setup.bash

Run a roscore. This can be done from outside docker.
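Before launching, it can be useful to confirm that a ROS master is actually reachable. The sketch below is an illustration only: wait_for is a hypothetical helper, and it relies on the assumption that rostopic list exits with an error while no master is running.

```shell
#!/usr/bin/env bash
# Sketch: wait until a ROS master answers before launching the simulation.

# Hypothetical helper: retries a probe command up to <tries> times,
# one second apart, and succeeds as soon as the probe succeeds.
wait_for() {
    local tries="$1"
    shift
    local i=0
    while [ "$i" -lt "$tries" ]; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    return 1
}

# rostopic list is used as the probe; it is assumed to fail without a master.
if wait_for 3 rostopic list; then
    echo "ROS master is up"
else
    echo "no ROS master found; start one with: roscore"
fi
```

If the roscore runs outside Docker, the container must be able to reach it over the network for this check to pass from inside.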

When you launch the following examples, add buttons to the gui the same way as described at the beginning of the Running Examples Natively section.

To launch the UAV in a small room run:

mon launch core_central sim_main.launch world:=/home/ws/src/core_gazebo_sim/worlds/room.world

To launch the UAV in an indoor hallway environment run:

mon launch core_central sim_main.launch world:=/home/ws/src/core_gazebo_sim/worlds/hawkins_qualification.world

To launch the UAV in an indoor two-story building run:

mon launch core_central sim_main.launch world:=/home/ws/src/core_gazebo_sim/worlds/filmmakers2.world

[1] G. Best, R. Garg, J. Keller, G. A. Hollinger, S. Scherer. “Resilient Multi-Sensor Exploration of Multifarious Environments with a Team of Aerial Robots”. Proc. Robotics: Science and Systems, 2022