Semantic & Dynamic SLAM

We developed a method for real-time semantic understanding and 3D mapping in both static and dynamic environments, and we demonstrate that the two tasks can benefit each other. Semantic mapping is used in many applications, such as autonomous robots and AR/VR. However, most existing approaches treat semantic understanding and 3D mapping as separate problems. In addition, current SLAM approaches often treat dynamic objects as outliers during mapping.

In this work, we first propose a consistent semantic 3D mapping system in which the grid labels are jointly optimized in 3D space over multiple views, instead of being inferred for each frame independently. Second, we jointly optimize the camera’s ego-motion and the dynamic objects’ trajectories over time. We propose a multi-view bundle adjustment with new object measurements that jointly optimizes the poses of cameras, objects, and points.
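
To illustrate why combining several views gives more consistent labels than labeling each frame on its own, here is a minimal sketch for a single grid cell. It is not the joint optimization used in this project: it swaps in a much simpler technique, independent per-cell Bayesian fusion under a uniform prior, and the function name `fuse_labels` and the example probabilities are hypothetical.

```python
import math


def fuse_labels(observations, num_classes, eps=1e-6):
    """Fuse per-view class probabilities for ONE grid cell.

    Assumption (not from the project): views are treated as independent
    and the prior is uniform, so the fused posterior is proportional to
    the product of the per-view probabilities.
    """
    log_scores = [0.0] * num_classes
    for probs in observations:
        for c in range(num_classes):
            # Clamp to eps so a single zero probability cannot veto a class.
            log_scores[c] += math.log(max(probs[c], eps))

    # Normalize in a numerically stable way (log-sum-exp).
    m = max(log_scores)
    exps = [math.exp(s - m) for s in log_scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical per-view predictions for one cell, two classes.
views = [[0.6, 0.4], [0.3, 0.7], [0.2, 0.8]]
posterior = fuse_labels(views, num_classes=2)
label = max(range(2), key=lambda c: posterior[c])
```

In this example the first view alone would choose class 0, while the fused estimate over all three views chooses class 1. A joint optimization over neighboring cells, as described above, would additionally couple the labels of different cells.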

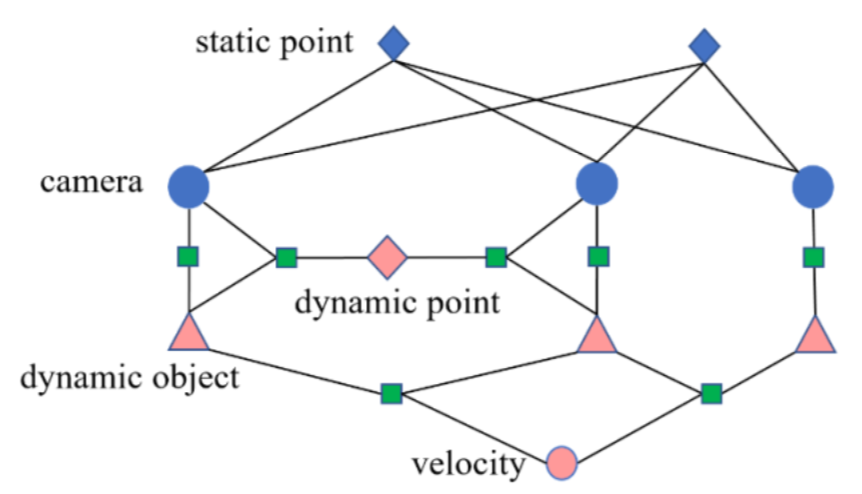

Instead of treating dynamic objects as outliers, we propose to track each dynamic object in the scene and estimate its motion model. By tracking motion at the object level, we can make use of the feature points on dynamic objects, so the camera pose is partially constrained by dynamic points and dynamic objects. The method rests on two assumptions. The first is the rigid-body assumption: a dynamic point’s position on its object does not change over time, shown as the red diamonds in Figure 1. This allows us to use the standard 3D map point re-projection error to optimize the position, shown as the black squares in the graph. The second assumption is that the object motion model is constrained to be physically feasible.
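
The rigid-body assumption can be made concrete with a small sketch of the re-projection residual for a point attached to a moving object. This is an illustration under stated assumptions, not the project's implementation: it assumes a pinhole camera, poses given as a rotation matrix and translation, and hypothetical names (`transform`, `project`, `dynamic_point_residual`). The point is stored once in the object frame, and only the object pose changes between frames.

```python
def transform(R, t, p):
    """Apply a rigid transform: R (3x3 nested lists) times p, plus t."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


def project(p_cam, fx, fy, cx, cy):
    """Pinhole projection of a 3D point given in the camera frame."""
    x, y, z = p_cam
    return (fx * x / z + cx, fy * y / z + cy)


def dynamic_point_residual(obs_uv, p_obj, obj_pose, cam_pose, intrinsics):
    """Re-projection residual for a point that is fixed in its object frame.

    obj_pose maps object -> world, cam_pose maps world -> camera
    (both conventions are assumptions made for this sketch).
    """
    R_wo, t_wo = obj_pose
    R_cw, t_cw = cam_pose
    p_world = transform(R_wo, t_wo, p_obj)   # object frame -> world frame
    p_cam = transform(R_cw, t_cw, p_world)   # world frame -> camera frame
    u, v = project(p_cam, *intrinsics)
    return (obs_uv[0] - u, obs_uv[1] - v)


I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
intrinsics = (500.0, 500.0, 320.0, 240.0)    # fx, fy, cx, cy (made-up values)
camera = (I3, [0.0, 0.0, 0.0])               # static camera at the origin
p_obj = [0.5, 0.0, 0.0]                      # same object-frame point in both frames

# The object translates by 1 m along x between the two frames.
r0 = dynamic_point_residual((470.0, 240.0), p_obj, (I3, [1.0, 0.0, 5.0]), camera, intrinsics)
r1 = dynamic_point_residual((570.0, 240.0), p_obj, (I3, [2.0, 0.0, 5.0]), camera, intrinsics)
```

Both residuals are zero here because the observations were generated from the same object-frame point. In an optimizer, the camera pose, the object poses, and `p_obj` would all be variables, and a motion-model term would additionally penalize physically infeasible object trajectories.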

Project Members

- Wenshan Wang

- Chen Wang

- Yafei Hu

- Yuheng Qiu