Unified line segment detection and line-based 2D-3D registration/localization

The 2D-3D registration problem is to match a query image against a 3D model in order to establish geometric correspondences between the two modalities and estimate the camera pose. Because cameras and LiDAR are widely used for scene interpretation and intelligent transportation, 2D-3D registration is essential for many applications, e.g., point cloud colorization, camera-to-LiDAR calibration, and camera localization. Low-cost LiDAR sensors usually capture geometry without texture, and without texture it is difficult to recognize semantic content, even for a human interpreter. Given the estimated transformation parameters, image textures can be used to colorize untextured point clouds, which helps further interpretation. With the development of low-cost LiDAR sensors and advances in LiDAR-based Simultaneous Localization and Mapping (SLAM) algorithms, 3D point cloud models are now easy to obtain. 2D-3D registration can therefore be used to localize a small, lightweight camera inside pre-built 3D maps, which is attractive and complementary to existing visual SLAM technology.

In this work, we first developed a unified line segment detection method that combines a Bezier curve line representation with an end-to-end trainable network, which guarantees the robustness of the geometric feature extraction back-end. Then, for global 2D-3D localization in a Manhattan world, we proposed using geometric constraints between vanishing points and 3D parallel lines to decouple the 6-DoF pose estimation. Finally, we developed a systematic 2D-3D pose tracking framework that iteratively optimizes the 2D-3D line correspondences and the camera pose.

Some of the current contributions are as follows:

Unified line feature detection across pinhole, fisheye and spherical cameras

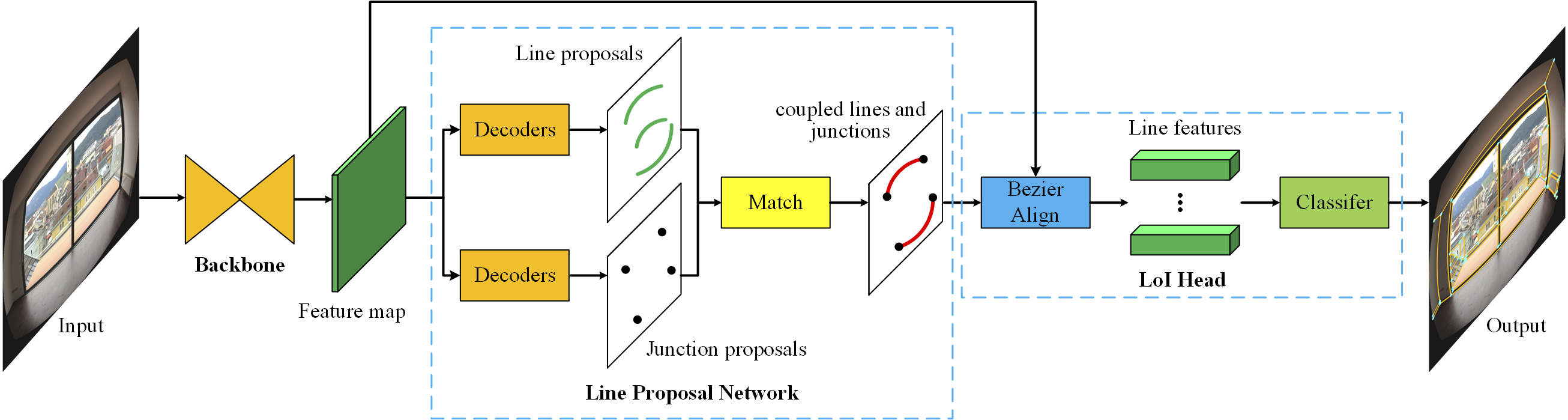

Image line segment detection is a fundamental problem in computer vision and robotics. Although numerous state-of-the-art methods perform well on straight line segments, detecting line segments in distorted images without first undistorting them remains challenging. Moreover, there is no unified line segment detection framework for both distorted and undistorted images. To address these two problems, we propose a learning-based Unified Line Segment Detection method (ULSD) for distorted and undistorted images. Specifically, we first propose a novel equipartition point-based Bezier curve representation to model arbitrarily distorted line segments. Line segment detection is then tackled by equipartition point regression with an end-to-end trainable neural network. Consequently, ULSD is independent of camera distortion parameters and needs no undistortion preprocessing. In the experiments, the method is first evaluated separately on pinhole, fisheye, and spherical image datasets, and then trained and tested on a mixed dataset containing images with different distortions. The results on each distortion model show that ULSD is more competitive than state-of-the-art methods in both accuracy and efficiency, especially for the unified model trained on the mixed dataset, demonstrating the effectiveness and generality of ULSD in real-world scenarios.
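To make the representation more concrete, the following minimal sketch shows one way an equipartition point-based Bezier curve could be handled numerically. It assumes that the equipartition points are samples of the curve at uniformly spaced parameter values t = i/n for a curve of order n; this reading, and the function names `bernstein_matrix`, `equipartition_points`, and `control_points_from_equipartition`, are illustrative assumptions and not taken from the ULSD implementation.

```python
from math import comb

import numpy as np


def bernstein_matrix(order, ts):
    """Return the (len(ts), order + 1) matrix of Bernstein basis values."""
    ts = np.asarray(ts, dtype=float)
    columns = [
        comb(order, k) * ts**k * (1.0 - ts) ** (order - k)
        for k in range(order + 1)
    ]
    return np.stack(columns, axis=1)


def equipartition_points(control_points):
    """Sample the Bezier curve at t = 0, 1/n, ..., 1 (assumed definition)."""
    control_points = np.asarray(control_points, dtype=float)
    order = len(control_points) - 1
    ts = np.linspace(0.0, 1.0, order + 1)
    return bernstein_matrix(order, ts) @ control_points


def control_points_from_equipartition(points):
    """Invert the linear map above to recover the control points."""
    points = np.asarray(points, dtype=float)
    order = len(points) - 1
    ts = np.linspace(0.0, 1.0, order + 1)
    return np.linalg.solve(bernstein_matrix(order, ts), points)


# A quadratic curve (order 2) as could arise from a distorted line segment.
control = np.array([[0.0, 0.0], [1.0, 2.0], [3.0, 0.0]])
points = equipartition_points(control)
recovered = control_points_from_equipartition(points)
```

Under this assumption the sampled points lie on the curve itself, so a network can regress them directly in image space, and a straight segment is simply the order-1 case in which the two points are the endpoints.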

Line-based 2D-3D registration and camera localization in structured environments

Accurate registration of 2D imagery with point clouds is a key technology for image-LiDAR point cloud fusion, camera-to-laser-scanner calibration, and camera localization. Despite continuous improvements, automatic registration of 2D images and 3D LiDAR maps without additional textured information still faces great challenges. We propose a new 2D-3D registration method that estimates 2D-3D line feature correspondences and the camera pose in untextured point clouds of structured environments. Specifically, we first use geometric constraints between vanishing points and 3D parallel lines to compute all feasible camera rotations. Then, we use a hypothesis testing strategy to estimate the 2D-3D line correspondences and the translation vector. The best rotation matrix is found by checking its consistency with the computed correspondences. Finally, the camera pose is refined by non-linear optimization over all the 2D-3D line correspondences. The experimental results demonstrate the effectiveness of the proposed method on synthetic and real datasets (outdoor and indoor) with repeated structures and rapid depth changes.
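The rotation step can be illustrated with a small sketch. A vanishing point v back-projects to the camera-frame direction K^-1 v, which must equal the rotated direction of the matching family of 3D parallel lines up to sign. The code below assumes a known intrinsic matrix `K` and an already hypothesized pairing between two vanishing points and two 3D line directions, and it enumerates the sign ambiguity to produce candidate rotations. The SVD-based (Kabsch) alignment and all function names are illustrative choices, not necessarily the solver used in the paper.

```python
from itertools import product

import numpy as np


def vp_direction(K, vp):
    """Back-project a vanishing point (pixels) to a unit direction in the camera frame."""
    d = np.linalg.solve(K, np.array([vp[0], vp[1], 1.0]))
    return d / np.linalg.norm(d)


def align_directions(dirs_world, dirs_cam):
    """Least-squares rotation R with R @ w_i ~= c_i (Kabsch algorithm via SVD)."""
    W = np.asarray(dirs_world, dtype=float)
    C = np.asarray(dirs_cam, dtype=float)
    U, _, Vt = np.linalg.svd(W.T @ C)
    # Force det(R) = +1 so the result is a proper rotation, not a reflection.
    s = 1.0 if np.linalg.det(Vt.T @ U.T) > 0 else -1.0
    return Vt.T @ np.diag([1.0, 1.0, s]) @ U.T


def candidate_rotations(K, vps, dirs_world):
    """Enumerate rotations consistent with two vanishing points up to sign."""
    w1, w2 = (np.asarray(d, dtype=float) / np.linalg.norm(d) for d in dirs_world)
    c1, c2 = (vp_direction(K, vp) for vp in vps)
    candidates = []
    for s1, s2 in product((1.0, -1.0), repeat=2):
        a, b = s1 * c1, s2 * c2
        # The cross product supplies a third direction pair and fixes handedness.
        candidates.append(
            align_directions([w1, w2, np.cross(w1, w2)], [a, b, np.cross(a, b)])
        )
    return candidates
```

Each candidate would then be scored in the later stages, for example by how many 2D-3D line correspondences it supports, so the sketch only covers the generation of feasible rotations.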

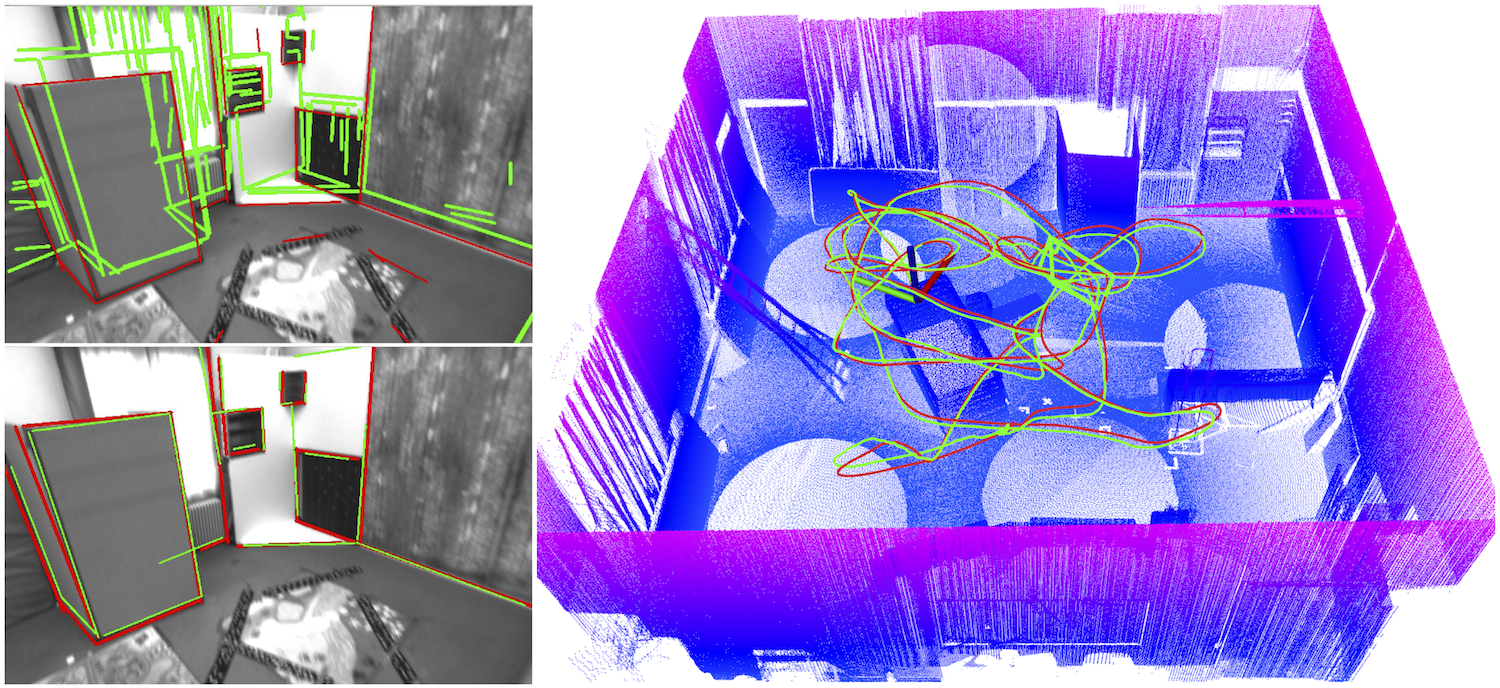

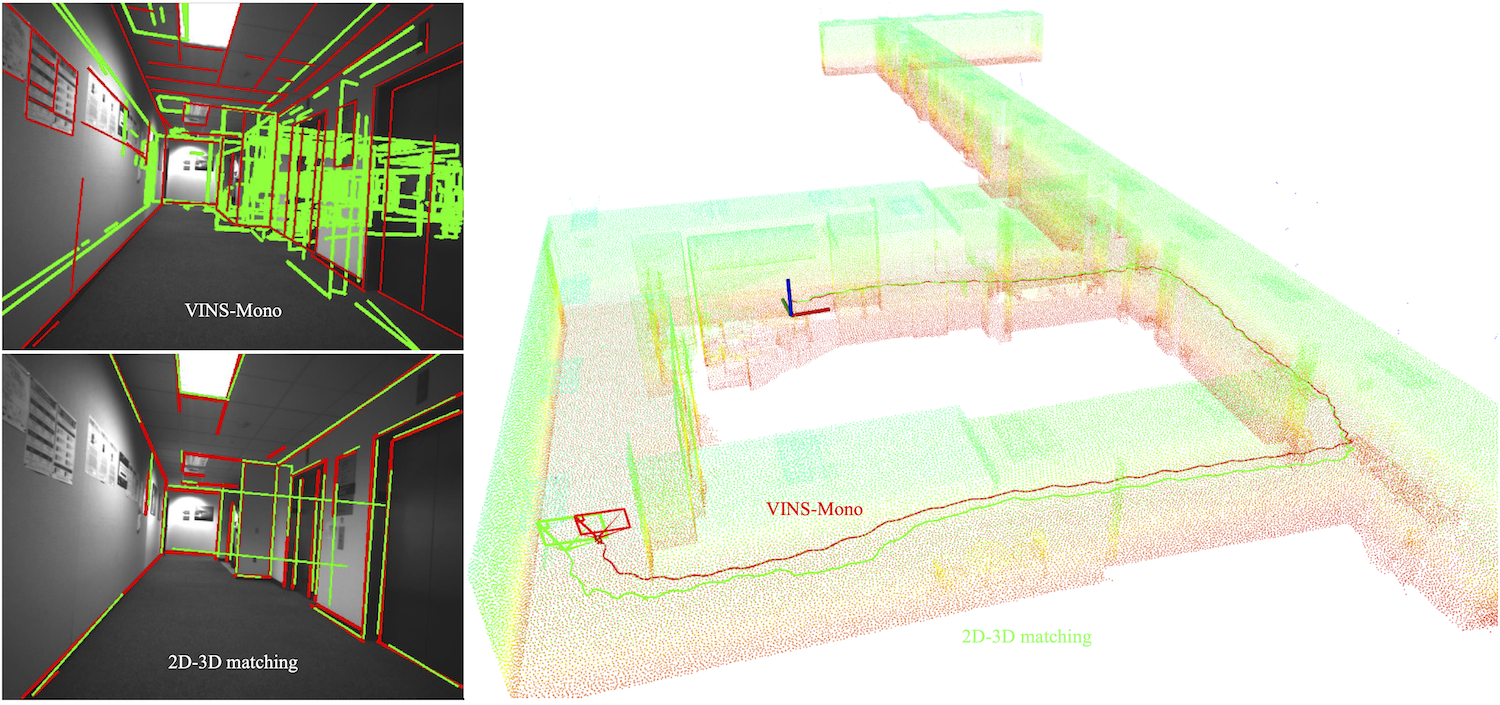

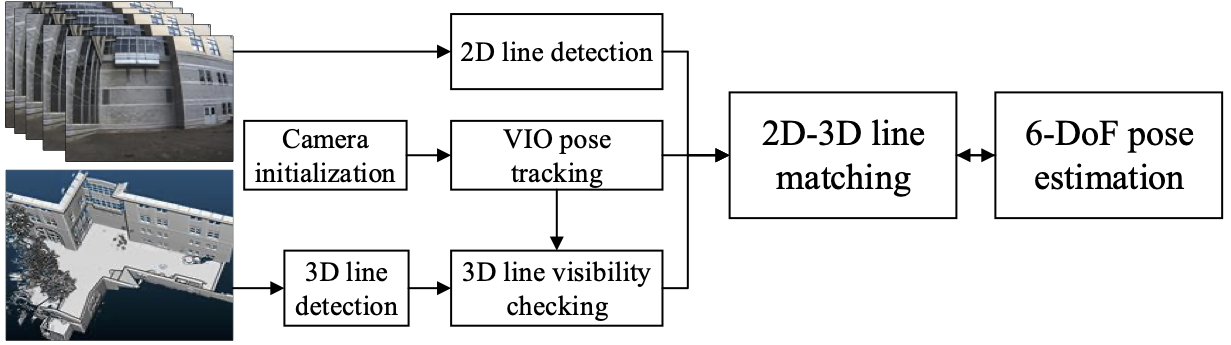

Line-based 2D-3D pose tracking

Lightweight camera localization in existing maps is essential for vision-based navigation. Currently, visual and visual-inertial odometry (VO and VIO) techniques are well developed for state estimation, but they suffer from inevitable accumulated drift and from pose jumps upon loop closure. To overcome these problems, we propose an efficient monocular camera localization method in prior LiDAR maps using direct 2D-3D line correspondences. To handle the appearance differences and modality gaps between LiDAR point clouds and images, geometric 3D lines are extracted offline from LiDAR maps, while robust 2D lines are extracted online from video sequences. With the pose prediction from VIO, we can efficiently obtain coarse 2D-3D line correspondences. The camera poses and 2D-3D correspondences are then iteratively optimized by minimizing the projection error of the correspondences and rejecting outliers. Experimental results on the EuRoC MAV dataset and our collected dataset demonstrate that the proposed method can efficiently estimate camera poses without accumulated drift or pose jumps in structured environments.
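As a rough illustration of the quantities involved in the refinement loop, the sketch below computes a point-to-line reprojection residual for each 2D-3D line pair and applies a simple threshold to reject outliers. It assumes a pinhole model with x_cam = R X + t, lines given by their endpoints, and a hypothetical threshold of 3 pixels; the function names and the threshold are illustrative, and the pose update itself is left to any non-linear least-squares solver.

```python
import numpy as np


def project(K, R, t, X):
    """Project (N, 3) world points to (N, 2) pixels, with x_cam = R @ X + t."""
    Xc = X @ R.T + t
    uv = Xc @ K.T
    return uv[:, :2] / uv[:, 2:3]


def line_through(p, q):
    """Homogeneous 2D line (a, b, c) through pixels p and q, scaled so a^2 + b^2 = 1."""
    line = np.cross([p[0], p[1], 1.0], [q[0], q[1], 1.0])
    return line / np.linalg.norm(line[:2])


def line_residuals(K, R, t, lines3d, lines2d):
    """Signed pixel distances of projected 3D endpoints to the matched 2D lines.

    lines3d has shape (M, 2, 3) and lines2d has shape (M, 2, 2).
    The result has shape (M, 2), one distance per projected endpoint.
    """
    residuals = np.empty((len(lines3d), 2))
    for i, (segment3d, segment2d) in enumerate(zip(lines3d, lines2d)):
        line = line_through(segment2d[0], segment2d[1])
        uv = project(K, R, t, segment3d)
        residuals[i] = np.c_[uv, np.ones(2)] @ line
    return residuals


def inlier_mask(residuals, threshold_px=3.0):
    """Keep correspondences whose worst endpoint distance is below the threshold."""
    return np.max(np.abs(residuals), axis=1) < threshold_px
```

Measuring the distance from projected 3D endpoints to the infinite 2D line, rather than endpoint to endpoint, means the detected 2D segment does not need to cover the same extent as the 3D line, which is convenient when segments are partially occluded or fragmented.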

The 2D-3D pose tracking was tested on a desktop (Intel Core i7-4790K CPU and GTX 980Ti GPU) and a laptop (Intel Core i7-8750H CPU and GTX 1060 GPU). The GPU is used only for 2D line detection. VINS-Mono does not use map information, and its output frequency is configurable; we selected the most stable setting, 15 Hz. Estimating the 2D-3D correspondences and the camera pose then costs about 0.01 seconds on average per keyframe. Because 3D line extraction is done offline before the system starts and 2D line detection runs at 25 Hz on 640x480 images, our method runs at about 13 to 15 Hz in all scenarios.

Publications

- Hao Li, Huai Yu, Wen Yang, Lei Yu, Sebastian Scherer, “ULSD: Unified Line Segment Detection across Pinhole, Fisheye, and Spherical Cameras”, ISPRS Journal of Photogrammetry and Remote Sensing 178, 2021:187-202.

- Huai Yu, Weikun Zhen, Wen Yang, and Sebastian Scherer, “Line-Based 2-D–3-D Registration and Camera Localization in Structured Environments”, IEEE Transactions on Instrumentation and Measurement 69, no. 11 (2020): 8962-8972.

- Huai Yu, Weikun Zhen, Wen Yang, Ji Zhang, and Sebastian Scherer, “Monocular Camera Localization in Prior LiDAR Maps with 2D-3D Line Correspondences”, International Conference on Intelligent Robots and Systems (IROS), 2020.

Contact

- Huai Yu: (huaiy [at] andrew [dot] cmu [dot] edu)

- Sebastian Scherer: (basti [at] cmu [dot] edu)