AirDOS: Dynamic SLAM benefits from Articulated Objects

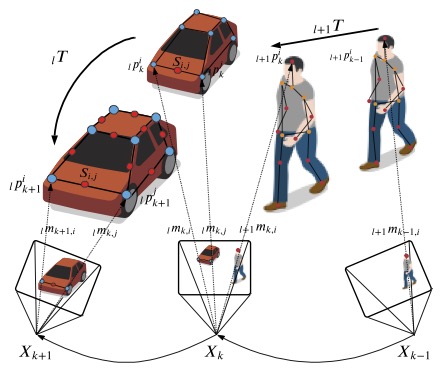

Dynamic Object-aware SLAM (DOS) exploits object-level information to enable robust motion estimation in dynamic environments. It has attracted increasing attention with the recent success of learning-based models. Existing methods mainly focus on identifying and excluding dynamic objects from the optimization. In this paper, we show that feature-based visual SLAM systems can also benefit from the presence of dynamic articulated objects by taking advantage of two observations:

- The 3D structure of an articulated object remains consistent over time;

- The points on the same object follow the same motion.

In particular, we present AirDOS, a dynamic object-aware system that introduces rigidity and motion constraints to model articulated objects. By jointly optimizing the camera pose, the object motion, and the object 3D structure, we can rectify the camera pose estimate, prevent tracking loss, and generate 4D spatio-temporal maps for both dynamic objects and static scenes.

Articulated Objects

In this paper, we extend simple rigid objects to general articulated objects, defined as objects composed of one or more rigid parts (links) connected by joints that allow rotational or translational motion, e.g., vehicles and humans. We use the properties of articulated objects to improve camera pose estimation by jointly optimizing their 3D structure and motion. To this end, we introduce (1) a rigidity constraint, which assumes that the distance between any two points on the same rigid part remains constant over time, and (2) a motion constraint, which assumes that feature points on the same rigid part follow the same 3D motion. This allows us to build a 4D spatio-temporal map that includes both dynamic and static structures.
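The two constraints can be illustrated with a minimal NumPy sketch. This is not the AirDOS implementation: the function names `rigidity_residual` and `motion_residual`, the 4x4 homogeneous transform `T`, and the toy points are assumptions made for illustration, and the exact residual forms and weighting used in the paper may differ.

```python
import numpy as np


def rigidity_residual(p_i_prev, p_j_prev, p_i_curr, p_j_curr):
    """Change in distance between two points on the same rigid part
    across two frames (zero if the part is perfectly rigid)."""
    d_prev = np.linalg.norm(p_i_prev - p_j_prev)
    d_curr = np.linalg.norm(p_i_curr - p_j_curr)
    return d_curr - d_prev


def motion_residual(T, p_prev, p_curr):
    """3D error between the observed point and the point predicted by
    applying the part's rigid motion T (assumed 4x4 homogeneous matrix)."""
    predicted = T[:3, :3] @ p_prev + T[:3, 3]
    return p_curr - predicted


# Hypothetical rigid part: rotate 90 degrees about z, then translate.
T = np.eye(4)
T[:3, :3] = np.array([[0.0, -1.0, 0.0],
                      [1.0, 0.0, 0.0],
                      [0.0, 0.0, 1.0]])
T[:3, 3] = [1.0, 0.0, 0.5]

# Three points on the part in the previous frame, moved by T.
points_prev = np.array([[0.0, 0.0, 0.0],
                        [1.0, 0.0, 0.0],
                        [0.0, 2.0, 0.0]])
points_curr = points_prev @ T[:3, :3].T + T[:3, 3]

# Both residuals vanish for points that truly move with the part.
rigid_err = rigidity_residual(points_prev[0], points_prev[1],
                              points_curr[0], points_curr[1])
motion_err = np.linalg.norm(motion_residual(T, points_prev[2], points_curr[2]))

# A point that drifts off the part violates the rigidity constraint.
drifted = points_curr[1] + np.array([0.3, 0.0, 0.0])
drift_err = rigidity_residual(points_prev[0], points_prev[1],
                              points_curr[0], drifted)
```

In a joint optimization, residuals of this kind would be added as extra cost terms alongside the usual reprojection error, so that points on dynamic objects constrain the solution rather than being discarded.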

Video

Publication

Please refer to our Paper and Code for details.

Contact

- Yuheng Qiu <yuhengq [at] andrew [dot] cmu [dot] edu>

- Chen Wang <chenwang [at] dr.com>

- Wenshan Wang <wenshanw [at] andrew [dot] cmu [dot] edu>

- Sebastian Scherer <basti [at] cmu [dot] edu>