Geometry-Informed Distance Candidate Selection for Omnidirectional Stereo Vision

This is the project page of the ICRA submission, “Geometry-Informed Distance Candidate Selection for Adaptive Lightweight Omnidirectional Stereo Vision with Fisheye Images”. For the code, dataset, and pre-trained models, please refer to the GitHub page.

Overview

Depth perception is essential for mobile robots in navigation and obstacle avoidance. LiDAR devices are commonly used because of their accuracy and speed, but they are mechanically complex and can be costly. Current multiview stereo (MVS) methods for fisheye images are either not robust enough or cannot run in real time. Many learning-based methods are computationally expensive because of their cost-volume construction, whose size grows with the number of distance candidates.
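As a rough illustration of why the cost volume dominates the expense, the sketch below estimates the memory of a dense C × N × H × W float32 cost volume, where N is the number of distance candidates. The channel count and feature-map resolution are hypothetical values chosen for illustration, not settings from this work; the point is only that memory (and the work spent filling and filtering the volume) scales linearly with N.

```python
def cost_volume_bytes(channels: int, candidates: int, height: int, width: int,
                      bytes_per_value: int = 4) -> int:
    """Memory of a dense channels x candidates x height x width cost volume.

    bytes_per_value defaults to 4 (float32).
    """
    return channels * candidates * height * width * bytes_per_value


if __name__ == "__main__":
    # Hypothetical settings: 32 feature channels on a 160 x 320 panorama grid.
    for n in (8, 16, 32):
        mib = cost_volume_bytes(32, n, 160, 320) / 2**20
        print(f"{n:2d} candidates -> {mib:.1f} MiB")
    # prints:
    #  8 candidates -> 50.0 MiB
    # 16 candidates -> 100.0 MiB
    # 32 candidates -> 200.0 MiB
```

Halving the candidate count halves the volume, which is why selecting fewer, better-placed candidates can cut inference cost.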

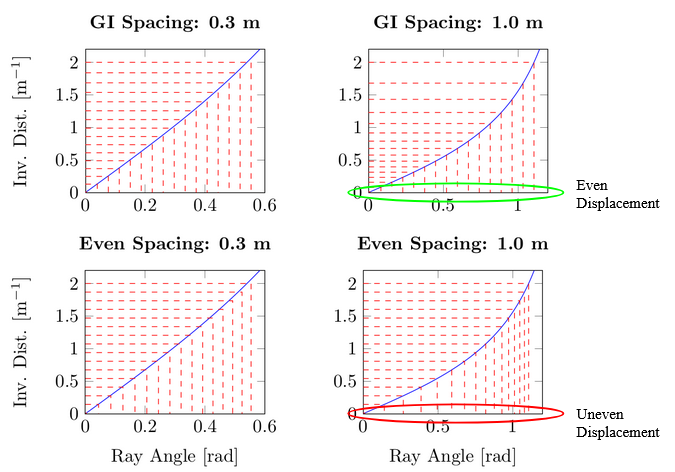

This work introduces Geometry-Informed (GI) distance candidates, which can be integrated into many omnidirectional MVS models that use a fixed number of distance candidates. GI candidates aim to improve depth estimation accuracy and to decrease inference time by reducing the number of candidates needed.
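The page does not spell out the GI selection rule, so the following is only a minimal sketch under an assumed geometric model. It places candidates along one reference ray so that the parallax angle seen from a query camera, rather than the distance itself, is evenly spaced between a near and a far limit. For an equidistant fisheye model, image offset is roughly proportional to angle, so equal angular steps correspond roughly to equal pixel steps in the query image. The function names, the ray angle `theta` (measured from the baseline), and the `d_min`/`d_max` limits are hypothetical parameters, not part of the released code.

```python
import math


def parallax_angle(d: float, baseline: float, theta: float) -> float:
    """Angle, at a point d along the reference ray, between the rays from the
    reference camera and from the query camera (d in the same unit as baseline).

    theta is the angle between the reference ray and the baseline vector
    (reference -> query), with 0 < theta < pi.
    """
    return math.atan2(baseline * math.sin(theta), d - baseline * math.cos(theta))


def distance_from_parallax(alpha: float, baseline: float, theta: float) -> float:
    """Inverse of parallax_angle, from the law of sines in the triangle formed
    by the two cameras and the point: d / sin(theta + alpha) = b / sin(alpha)."""
    return baseline * math.sin(theta + alpha) / math.sin(alpha)


def gi_candidates(n: int, d_min: float, d_max: float, baseline: float,
                  theta: float = math.pi / 2) -> list[float]:
    """Return n distances (ascending) whose parallax angles are evenly spaced
    between the parallax of d_min (largest) and that of d_max (smallest)."""
    if n < 2:
        raise ValueError("need at least two candidates")
    a_near = parallax_angle(d_min, baseline, theta)
    a_far = parallax_angle(d_max, baseline, theta)
    step = (a_near - a_far) / (n - 1)
    return [distance_from_parallax(a_near - k * step, baseline, theta) for k in range(n)]


if __name__ == "__main__":
    # Hypothetical rig: 0.1 baseline, ray perpendicular to the baseline.
    for d in gi_candidates(8, d_min=0.5, d_max=50.0, baseline=0.1):
        print(f"{d:.3f}")
```

Under this model the candidates are densely packed near the camera and spread out with distance, much like inverse-distance sampling, and unlike a fixed distance list they shift whenever `baseline` changes.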

GI candidates also give MVS models a useful property. The GI candidate distribution depends on the baseline distance, so as the baseline between the reference and query cameras changes, so do the selected GI candidates. We show that this dependency allows camera locations to be adjusted after training: the model still performs well once its GI candidates are recomputed for the new baseline.
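To make the baseline dependence concrete under one simple geometric model (an illustrative assumption, not necessarily the formulation used in the paper), let b be the baseline between the reference and query cameras, θ the angle between a reference ray and the baseline, and α the parallax angle at a point at distance d along that ray. The law of sines in the triangle formed by the two cameras and the point gives:

```latex
\frac{d}{\sin(\theta + \alpha)} = \frac{b}{\sin\alpha}
\quad\Longrightarrow\quad
d(\alpha) = b\,\frac{\sin(\theta + \alpha)}{\sin\alpha}
          = b\cos\theta + \frac{b\sin\theta}{\tan\alpha}
```

For a fixed set of parallax angles α_k, every candidate distance is proportional to b, so moving the query camera to a new baseline b' maps each candidate to d_k' = (b'/b) d_k. Under this model the candidates follow the camera placement instead of staying fixed in metric distance.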

100k-Sample Multiview Stereo Vision Dataset

The dataset consists of 112,344 samples collected from 68 environments. Our camera configuration is an evaluation board with three fisheye cameras arranged in a triangle, and each sample contains an RGB fisheye image and a ground-truth depth map for each of the three cameras. Each sample also includes a ground-truth RGB panorama and a distance-map panorama at the reference camera’s location.
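A minimal sketch of how one sample might be represented in code is shown below. The released file format is not described on this page, so the class name, field names, and the use of file paths are hypothetical; the class only encodes what the paragraph states: per-camera RGB and depth for three cameras, plus an RGB panorama and a distance panorama at the reference camera.

```python
from dataclasses import dataclass
from typing import List

NUM_CAMERAS = 3  # three fisheye cameras in a triangular arrangement


@dataclass
class MultiviewSample:
    """Hypothetical container for one dataset sample (field names are illustrative)."""
    fisheye_rgb: List[str]     # one RGB fisheye image per camera
    fisheye_depth: List[str]   # one ground-truth depth map per camera
    pano_rgb: str              # ground-truth RGB panorama at the reference camera
    pano_distance: str         # ground-truth distance panorama at the reference camera
    reference_index: int = 0   # which camera acts as the reference (assumed)

    def validate(self) -> None:
        """Raise ValueError if the sample does not match the three-camera layout."""
        if len(self.fisheye_rgb) != NUM_CAMERAS or len(self.fisheye_depth) != NUM_CAMERAS:
            raise ValueError(f"expected {NUM_CAMERAS} RGB images and {NUM_CAMERAS} depth maps")
        if not 0 <= self.reference_index < NUM_CAMERAS:
            raise ValueError("reference_index must name one of the cameras")
```

Checking the camera count up front catches samples from a different rig before they reach a loader that assumes three views.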

The dataset will be released soon on our GitHub project page.

More Results

The results below compare models on synthetically generated images from previously unseen environments. The Geometry-Informed (GI) candidate distribution improves distance candidate selection. Using GI candidates leads to:

- Better accuracy in distance estimation

- Faster inference, because fewer candidates are needed

Another benefit of GI candidates is that they accommodate changes in the distance between cameras after training, without retraining.

The following table presents performance metrics across various models:

| Model | Candidate Type | Num. Candidates | MAE | RMSE | SSIM | Time (ms) | GPU Start (MB) | GPU Peak (MB) |
|---|---|---|---|---|---|---|---|---|
| RTSS[1] | EV | 32 | 0.053 | 0.101 | 0.776 | 144 | 330 | 4240 |
| E8 | EV | 8 | 0.013 | 0.032 | 0.862 | 65 | 790 | 1030 |
| G8 | GI | 8 | 0.012 | 0.029 | 0.867 | 65 | 790 | 1030 |
| E16 | EV | 16 | 0.011 | 0.028 | 0.876 | 111 | 790 | 1230 |
| G16 | GI | 16 | 0.010 | 0.028 | 0.877 | 111 | 790 | 1230 |
| G16V | GI | 16 | 0.013 | 0.029 | 0.861 | 111 | 790 | 1230 |
| G16VV | GI | 16 | 0.012 | 0.028 | 0.872 | 114 | 800 | 1090 |
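The trade-offs in the table can be quantified with simple arithmetic. The sketch below copies MAE and runtime values from the table and computes relative changes; since the table reports MAE to three decimals, the percentages are only approximate.

```python
# (candidates, MAE, time in ms), copied from the table above.
RESULTS = {
    "RTSS": (32, 0.053, 144),
    "E8": (8, 0.013, 65),
    "G8": (8, 0.012, 65),
    "E16": (16, 0.011, 111),
    "G16": (16, 0.010, 111),
}


def relative_change(new: float, old: float) -> float:
    """Signed fractional change from old to new; negative means a reduction."""
    return (new - old) / old


def mae_change_gi_vs_ev(n: int) -> float:
    """Relative MAE change of the GI model over the EV model with n candidates."""
    return relative_change(RESULTS[f"G{n}"][1], RESULTS[f"E{n}"][1])


if __name__ == "__main__":
    for n in (8, 16):
        print(f"{n} candidates: MAE change {mae_change_gi_vs_ev(n):+.1%}")
    speedup = RESULTS["RTSS"][2] / RESULTS["G8"][2]
    print(f"G8 speed-up over RTSS: {speedup:.2f}x")
    # prints:
    # 8 candidates: MAE change -7.7%
    # 16 candidates: MAE change -9.1%
    # G8 speed-up over RTSS: 2.22x
```

At equal candidate counts the GI and EV rows share runtime and memory, so the MAE difference isolates the effect of candidate placement.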

Pre-trained models will be released soon on our GitHub project page.

Manuscript

Please refer to this link.

@misc{hu2021orstereo,
  title={Geometry-Informed Distance Candidate Selection for Adaptive Lightweight Omnidirectional Stereo Vision with Fisheye Images},
  author={Conner Pulling and Je Hon Tan and Yaoyu Hu and Sebastian Scherer},
  year={2023},
  primaryClass={cs.CV}
}

Contact

- Conner Pulling: (cpulling [at] andrew [dot] cmu [dot] edu)

- Yaoyu Hu: (yaoyuh [at] andrew [dot] cmu [dot] edu)

- Sebastian Scherer: (basti [at] cmu [dot] edu)

Acknowledgments

This work was supported by Singapore’s Defence Science and Technology Agency (DSTA).