Autonomous UAV-based Multi-Modal High-Resolution Reconstruction for Aging Bridge Inspection

During a 4-year research collaboration with an international civil engineering corporation (Shimizu Institute of Technology), the AirLab team built several specialized sensor components and robots to explore how our knowledge and skills could be applied for the benefit of the general public.

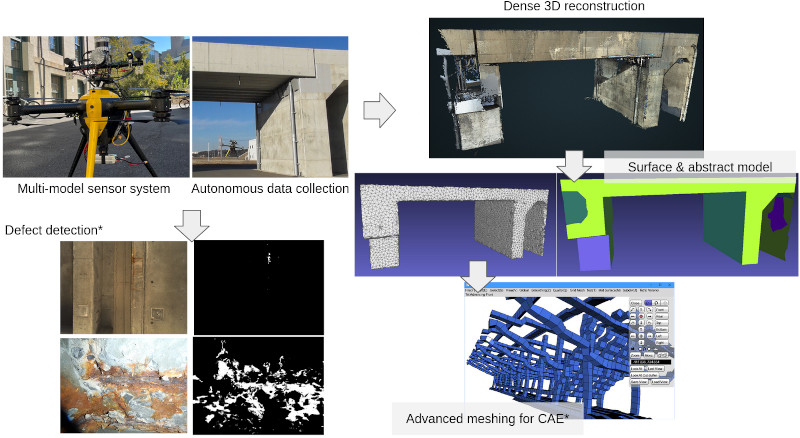

With the goal of enabling automated inspection of infrastructure such as buildings and bridges, we developed a series of sensor payloads and drone systems that automatically collect multi-modal data. They are paired with an offline reconstruction system that uses the collected data to build a dense 3D model of the structure, with geometric detail and colored texture. The reconstructed data can be used for inspection tasks such as surface defect detection and quantification. They can also be further processed into 3D geometries suitable for scientific and engineering computation and analysis, e.g., structural safety analysis.

* Collaborations with the KLab and CerLab at Carnegie Mellon University.

To meet infrastructure inspection requirements, the sensor components and the robot platform need to deliver several key features:

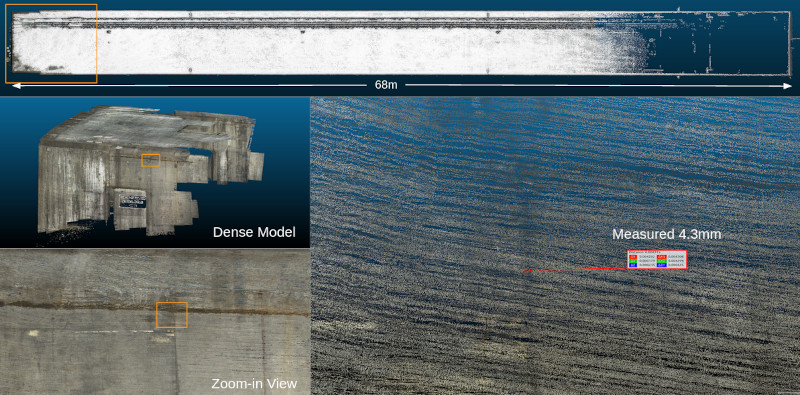

- Sub-millimeter 3D reconstruction for better defect quantification.

- Computer-aided inspection capability for working with human inspectors.

- Automatic defect detection in images.

- Reconstruction of the inspected target for computer-aided engineering, such as Finite Element Method (FEM) computations for structural safety analysis.

There are several challenges we have addressed:

- Extremely dense 3D reconstruction.

- Stereo Structure-from-Motion (SfM).

- High-resolution binocular stereo vision.

- Robust robot state estimation.

- Abstract mapping for lightweight localization.

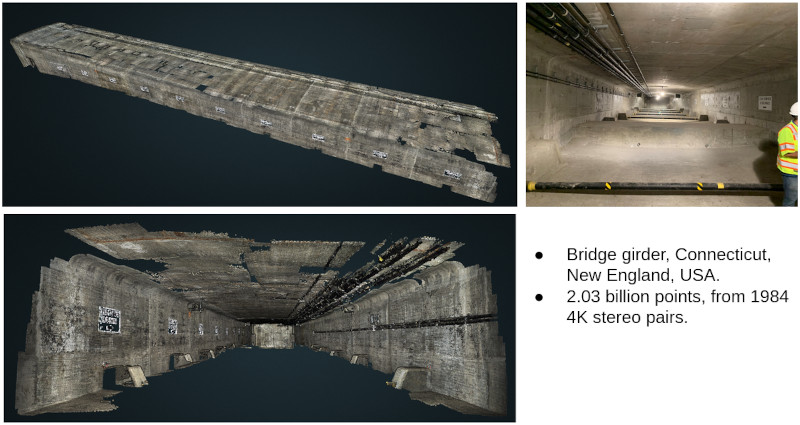

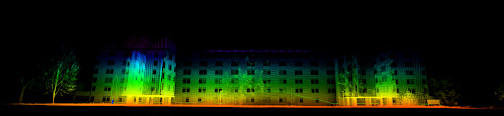

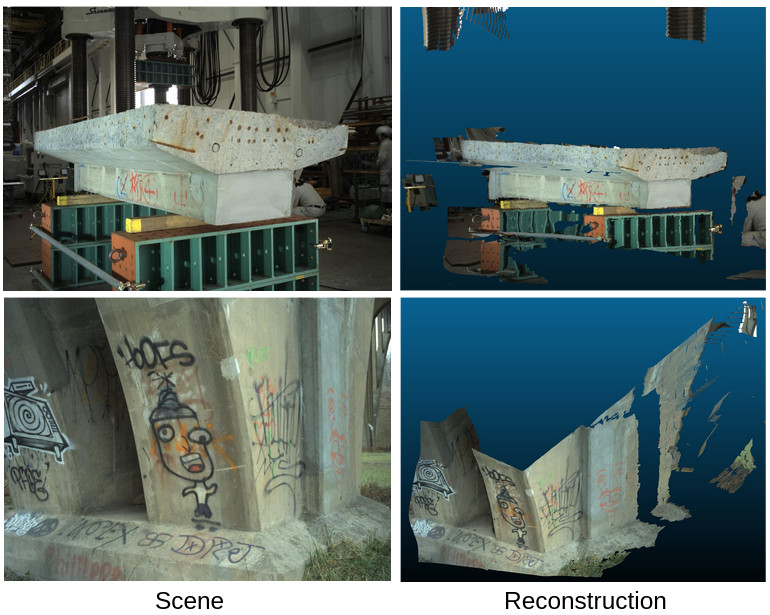

Some highlights of our reconstruction results from real-world data.

In the course of addressing these challenges and fulfilling the research objectives, the AirLab team built a series of hardware and software systems.

Sensors & Robots

Hardware at a glance

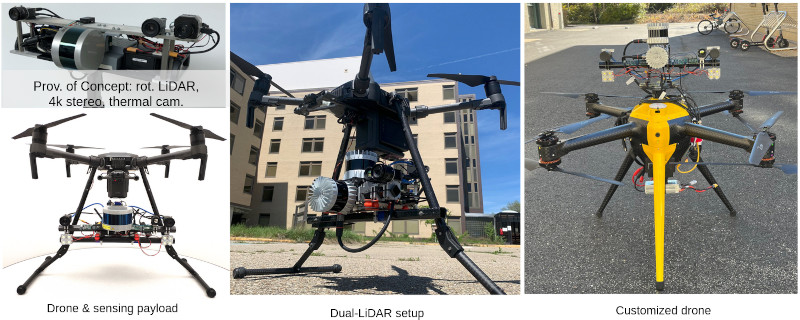

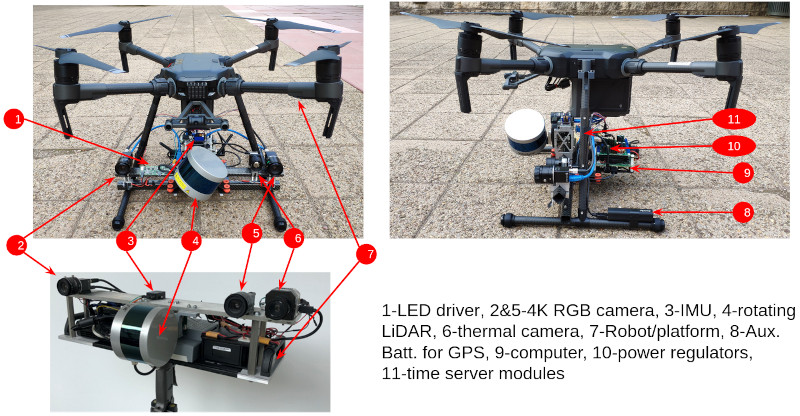

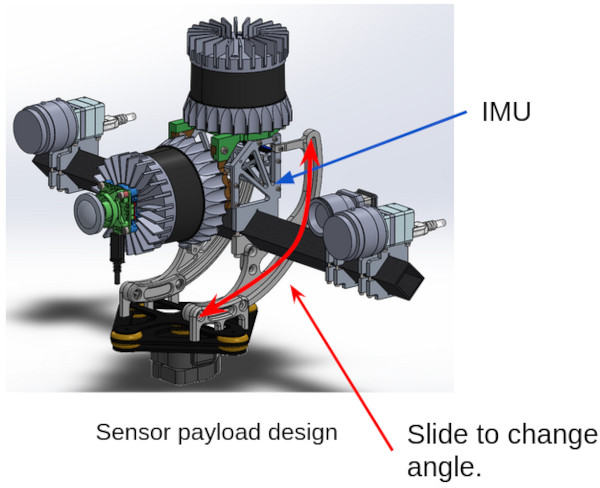

Our sensor component is a multi-modal sensor payload capable of real-time SLAM and high-resolution imaging.

Software created for working with the sensors and robots

To make effective use of our specialized sensor payloads, we developed a range of supporting software.

Customized time serving and time synchronization. A customized solution for easily synchronizing multiple sensors with the computer.
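The synchronization protocol itself is not described here. For reference, a common way to estimate the offset between a sensor clock and the host clock is the NTP-style two-way timestamp exchange sketched below. This is a generic technique, not necessarily what the AirLab solution does. The names `Exchange`, `estimate_offset`, and `best_offset` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Exchange:
    """Timestamps (seconds) from one request/response round trip (hypothetical)."""
    t0: float  # host sends request (host clock)
    t1: float  # sensor receives request (sensor clock)
    t2: float  # sensor sends reply (sensor clock)
    t3: float  # host receives reply (host clock)


def estimate_offset(ex: Exchange) -> tuple[float, float]:
    """Return (offset, round_trip_delay), assuming equal delay in both directions.

    A positive offset means the sensor clock is ahead of the host clock.
    """
    offset = ((ex.t1 - ex.t0) + (ex.t2 - ex.t3)) / 2.0
    delay = (ex.t3 - ex.t0) - (ex.t2 - ex.t1)
    return offset, delay


def best_offset(exchanges: list[Exchange]) -> float:
    """Pick the offset from the exchange with the smallest round-trip delay,
    since it is the least affected by queuing on the link."""
    return min((estimate_offset(ex) for ex in exchanges), key=lambda od: od[1])[0]
```

Filtering on the smallest delay is a simple guard against asymmetric queuing. A production system would typically also track clock drift over time.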

Camera driver for the binocular stereo camera. Hardware time synchronization and external hardware triggering for the stereo camera, plus custom exposure control for better consistency between its two cameras.
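The exposure controller is not detailed in the text. One simple way to keep the two cameras consistent is to compute a single exposure from the pooled brightness of both images and apply it to both sensors, instead of letting each camera auto-expose on its own. The sketch below takes that approach. The function name, target brightness, damping gain, and limits are hypothetical assumptions.

```python
import numpy as np


def shared_exposure(left: np.ndarray, right: np.ndarray, current_us: float,
                    target_mean: float = 110.0, gain: float = 0.5,
                    min_us: float = 20.0, max_us: float = 20000.0) -> float:
    """Hypothetical controller: one exposure time (microseconds) for BOTH cameras.

    Assumes brightness is roughly proportional to exposure time, so the ideal
    exposure is current * target / measured. `gain` damps the step (in log
    space) to avoid oscillating between frames.
    """
    # Pool both images so the two cameras share a single brightness measurement.
    measured = 0.5 * (float(left.mean()) + float(right.mean()))
    measured = max(measured, 1.0)  # avoid division by zero on black frames
    ratio = target_mean / measured
    new_us = current_us * ratio ** gain
    return float(np.clip(new_us, min_us, max_us))
```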

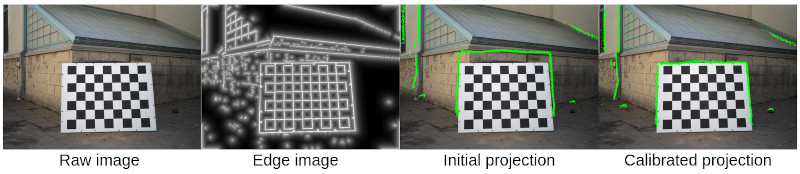

LiDAR-camera extrinsic calibration.

Stereo camera calibration. Intrinsic and extrinsic calibration of the stereo camera.

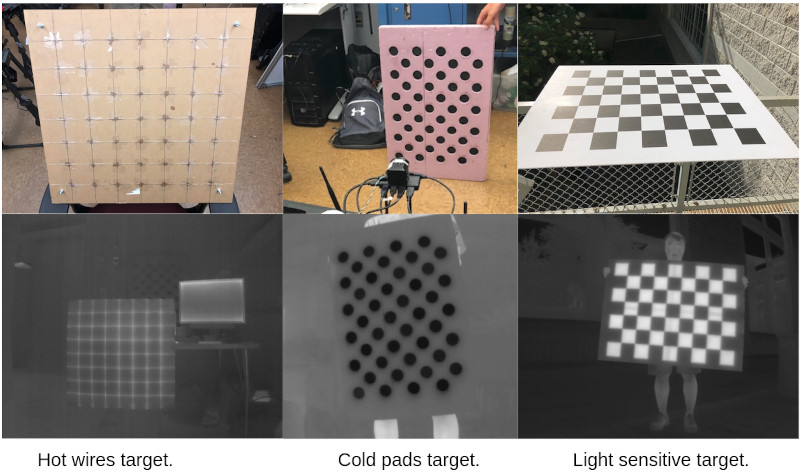

Thermal camera calibration. We designed several thermal targets for calibrating the intrinsics of the thermal camera.

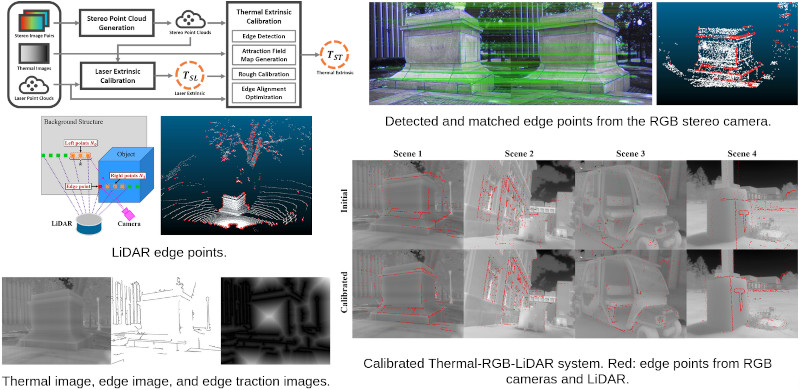

Thermal-RGB-LiDAR calibration. Joint calibration of the extrinsics among the thermal camera, the RGB camera, and the LiDAR.
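Calibrating the three sensors jointly, rather than pair by pair, allows the extrinsics to be made mutually consistent. The sketch below shows the loop constraint such a joint calibration can enforce: thermal-from-LiDAR should equal thermal-from-RGB composed with RGB-from-LiDAR. It assumes 4×4 homogeneous transforms, and the helper names are hypothetical. The leftover loop error is reported as a rotation angle and a translation norm.

```python
import numpy as np


def make_T(R: np.ndarray, t) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def rot_z(deg: float) -> np.ndarray:
    """Rotation about the z axis by `deg` degrees."""
    a = np.radians(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def loop_residual(T_th_rgb, T_rgb_lidar, T_th_lidar):
    """Return (rotation error in degrees, translation error in meters) of the loop
    inv(T_th_lidar) @ T_th_rgb @ T_rgb_lidar, which is the identity when the three
    pairwise extrinsics are consistent (hypothetical frame names)."""
    E = np.linalg.inv(T_th_lidar) @ T_th_rgb @ T_rgb_lidar
    cos_angle = np.clip((np.trace(E[:3, :3]) - 1.0) / 2.0, -1.0, 1.0)
    return float(np.degrees(np.arccos(cos_angle))), float(np.linalg.norm(E[:3, 3]))
```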

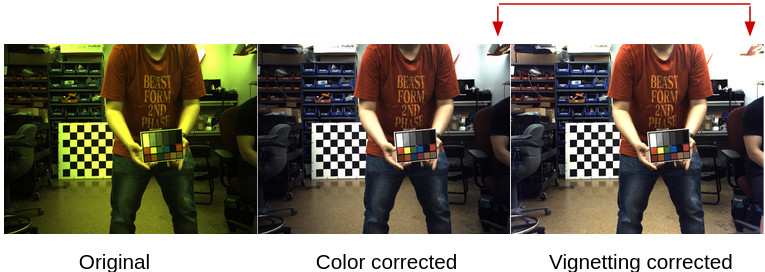

Automatic color correction. A deep-learning method detects the color target. The individual color patches are then located automatically and used to correct the image colors. Vignetting is corrected using detections from multiple frames.
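Once the color patches are located, a standard way to correct color is to fit a 3×3 linear color correction matrix by least squares, mapping the measured patch colors to the chart's reference values. This is not necessarily the exact model used here. The sketch assumes linear RGB values in [0, 1], and the function names are hypothetical.

```python
import numpy as np


def fit_ccm(measured: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Fit a 3x3 matrix M minimizing ||measured @ M - reference||^2.

    measured, reference: (N, 3) arrays of patch colors, N >= 3 (hypothetical helper).
    """
    M, *_ = np.linalg.lstsq(measured, reference, rcond=None)
    return M


def apply_ccm(image: np.ndarray, M: np.ndarray) -> np.ndarray:
    """Apply the matrix to an (H, W, 3) linear-RGB image and clip to [0, 1]."""
    corrected = image.reshape(-1, 3) @ M
    return np.clip(corrected, 0.0, 1.0).reshape(image.shape)
```

A 24-patch chart gives 24 equations per channel for 9 unknowns, so the fit is well over-determined.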

IMU orientation calibration. A dedicated procedure for calibrating the rotation angle of the IMU whenever the payload changes its configuration.
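The procedure itself is not described. As an illustration of what such a calibration can recover, the sketch below estimates the IMU's roll and pitch relative to a level payload from averaged accelerometer readings taken while the payload sits still. It assumes the accelerometer reports specific force, so a level IMU reads about +9.81 m/s² on its up axis, and it uses a yaw-pitch-roll convention. Yaw is unobservable from gravity and would need another reference. The function name is hypothetical.

```python
import numpy as np


def roll_pitch_from_gravity(accel_samples: np.ndarray) -> tuple[float, float]:
    """Hypothetical helper: IMU roll and pitch (degrees) from static accelerometer data.

    accel_samples: (N, 3) specific-force readings [ax, ay, az] taken while the
    payload is stationary and level. With a ZYX (yaw-pitch-roll) convention the
    reading is g * (-sin(pitch), sin(roll)cos(pitch), cos(roll)cos(pitch)).
    """
    ax, ay, az = accel_samples.mean(axis=0)  # averaging suppresses sensor noise
    roll = np.arctan2(ay, az)
    pitch = np.arctan2(-ax, np.hypot(ay, az))
    return float(np.degrees(roll)), float(np.degrees(pitch))
```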

Autonomy

Dual-LiDAR-IMU real-time state estimation.

Full-stack autonomy software. Autonomy software developed in the AirLab is deployed on our drone. It implements the robot state machine, global and local trajectory planning, and robot control.
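The state machine is only named in the text. A minimal way to organize such a mission state machine is a table of allowed transitions keyed by (state, event). The states, events, and class names below are hypothetical and do not reflect the actual design of the AirLab stack.

```python
from enum import Enum, auto


class State(Enum):
    """Hypothetical mission states."""
    IDLE = auto()
    TAKEOFF = auto()
    INSPECT = auto()
    RETURN = auto()
    LAND = auto()


# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    (State.IDLE, "start"): State.TAKEOFF,
    (State.TAKEOFF, "reached_altitude"): State.INSPECT,
    (State.INSPECT, "route_done"): State.RETURN,
    (State.INSPECT, "low_battery"): State.RETURN,
    (State.RETURN, "at_home"): State.LAND,
    (State.LAND, "landed"): State.IDLE,
}


class MissionStateMachine:
    def __init__(self) -> None:
        self.state = State.IDLE

    def handle(self, event: str) -> State:
        """Apply an event; events not valid in the current state are ignored."""
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state
```

Keeping transitions in a table makes the allowed behavior easy to review. Ignoring invalid events, rather than raising, is one design choice; a safety-critical stack might log them or trigger a failsafe instead.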

Computer-aided inspection route planning. A simple GUI for the human inspector to design and manage inspection routes.
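The GUI's internals are not described. As an example of the kind of route an inspector might design, the sketch below generates a boustrophedon (lawnmower) sweep of waypoints over a planar, vertical face at a fixed standoff distance. The geometry, spacing, and function name are illustrative assumptions.

```python
import math


def lawnmower_route(width_m: float, height_m: float, spacing_m: float,
                    standoff_m: float) -> list[tuple[float, float, float]]:
    """Hypothetical planner: waypoints (x, y, z) sweeping a vertical face.

    The face spans x in [0, width_m] and z in [0, height_m] in the plane y = 0.
    The drone flies at y = -standoff_m and alternates direction on horizontal
    passes spaced spacing_m apart; the last pass is clamped to the top edge.
    """
    if spacing_m <= 0:
        raise ValueError("spacing_m must be positive")
    waypoints = []
    n_passes = math.ceil(height_m / spacing_m) + 1
    for i in range(n_passes):
        z = min(i * spacing_m, height_m)
        xs = (0.0, width_m) if i % 2 == 0 else (width_m, 0.0)
        for x in xs:
            waypoints.append((x, -standoff_m, z))
    return waypoints
```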

Data processing

Because of the sheer volume of data, many data processing procedures are automated with scripts and run on remote servers.

Data extraction and pre-processing. Images and point clouds are extracted from large volumes of raw data and preprocessed on the remote server, using its many CPU cores in parallel wherever possible.
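A minimal sketch of this pattern, assuming each raw frame can be preprocessed independently, is to map a per-frame function over a process pool. `preprocess_frame` and `preprocess_all` are hypothetical placeholders for the actual decoding and filtering steps. The executor class is a parameter so the same code can run with threads when a process pool is inconvenient.

```python
import concurrent.futures as cf
import os
from typing import Optional


def preprocess_frame(path: str) -> tuple[str, int]:
    """Hypothetical placeholder step: returns the path and its length.

    Replace the body with real work, e.g. decoding an image or filtering a
    point cloud. It must be a top-level function so a process pool can pickle it.
    """
    return path, len(path)


def preprocess_all(paths: list[str], workers: Optional[int] = None,
                   executor_cls: type = cf.ProcessPoolExecutor) -> list[tuple[str, int]]:
    """Apply preprocess_frame to every path in parallel, preserving input order."""
    workers = workers or os.cpu_count() or 1
    with executor_cls(max_workers=workers) as pool:
        # chunksize batches small tasks per worker to cut inter-process overhead;
        # thread pools accept and ignore it.
        return list(pool.map(preprocess_frame, paths, chunksize=16))
```

On a server this would be called as `preprocess_all(frame_paths)`. Passing `executor_cls=cf.ThreadPoolExecutor` suits I/O-bound steps or quick tests.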

Large-scale 3D reconstruction. A set of dedicated programs and scripts processes the collected data and performs large-scale 3D reconstruction on high-performance computing (HPC) systems. As with pre-processing, we exploit the multi-core architecture of the remote servers to accelerate the process. This allows reconstructions with billions of 3D points.

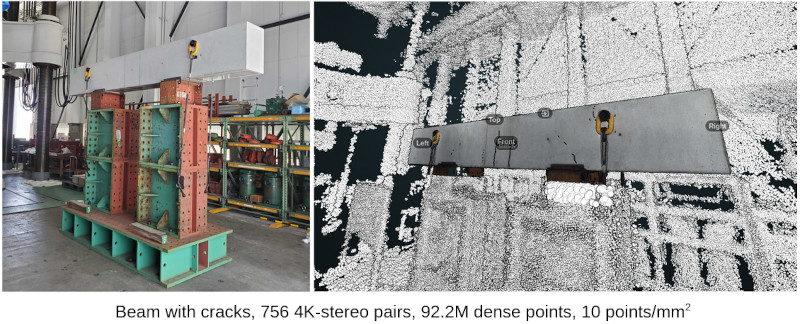

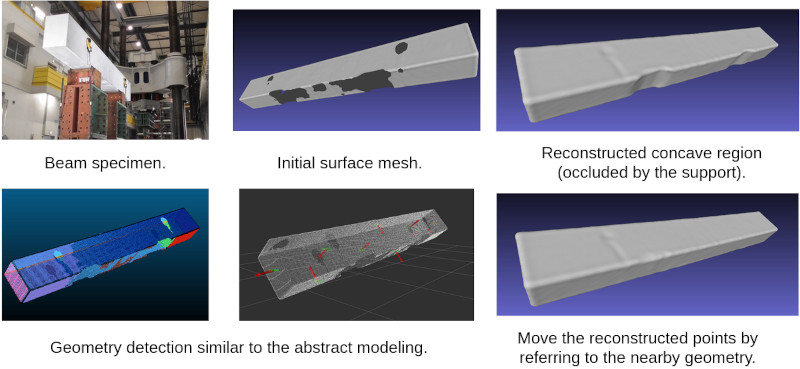

Meshing. A customized program automatically converts a point cloud into a surface mesh and fills holes in the mesh. In the example below, we scanned a concrete beam specimen, reconstructed a dense point cloud of it, and then generated a hole-filled surface mesh.
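Hole filling starts by finding the holes. In a triangle mesh, a hole's rim is made of boundary edges, meaning edges used by exactly one triangle. Chaining these edges yields the boundary loops that a filler then triangulates. The sketch below shows only this detection step, on a plain list of vertex-index triangles. It is a generic technique, not the AirLab program, and the function names are hypothetical.

```python
from collections import Counter, defaultdict


def boundary_edges(triangles):
    """Return sorted edges (i, j), i < j, that belong to exactly one triangle."""
    counts = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            counts[(min(u, v), max(u, v))] += 1
    return sorted(e for e, n in counts.items() if n == 1)


def boundary_loops(triangles):
    """Chain boundary edges into vertex loops (assumes manifold boundaries)."""
    adjacency = defaultdict(list)
    for u, v in boundary_edges(triangles):
        adjacency[u].append(v)
        adjacency[v].append(u)
    loops, visited = [], set()
    for start in sorted(adjacency):
        if start in visited:
            continue
        loop, cur = [start], start
        visited.add(start)
        while True:
            # Walk to the next unvisited boundary neighbor until the loop closes.
            nxt = next((n for n in adjacency[cur] if n not in visited), None)
            if nxt is None:
                break
            loop.append(nxt)
            visited.add(nxt)
            cur = nxt
        loops.append(loop)
    return loops
```

A closed (watertight) mesh has no boundary edges. Any loop found in a scan mesh is either its outer border or a hole to fill.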

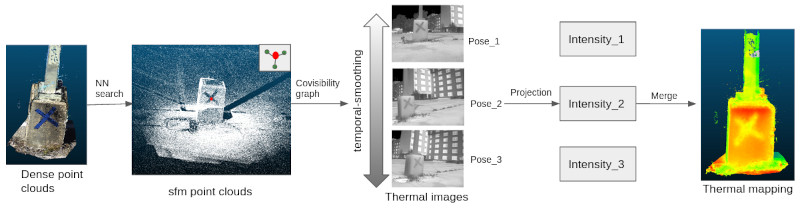

Thermal mapping. Given the dense 3D point cloud reconstructed from RGB images, we project the thermal images onto the point cloud. Special treatment is applied to smooth the thermal intensity values so that the thermal pixel values are temporally consistent.
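Projecting a thermal image onto the point cloud amounts to three steps: transform each 3D point into the thermal camera frame with the calibrated extrinsics, project it with the pinhole intrinsics, and sample the pixel it lands on. The sketch below assumes an undistorted image, nearest-pixel sampling, and a single frame. It ignores occlusion and the temporal smoothing mentioned above, and the function name is hypothetical.

```python
import numpy as np


def sample_thermal(points_w: np.ndarray, T_cam_w: np.ndarray, K: np.ndarray,
                   thermal: np.ndarray) -> np.ndarray:
    """Hypothetical helper: a thermal value per world point, NaN where not visible.

    points_w: (N, 3) points in the world frame.
    T_cam_w:  (4, 4) transform from the world frame to the thermal-camera frame.
    K:        (3, 3) pinhole intrinsics of the thermal camera.
    thermal:  (H, W) thermal image (e.g. temperature or raw intensity).
    """
    n = points_w.shape[0]
    pts_h = np.hstack([points_w, np.ones((n, 1))])
    pts_c = (T_cam_w @ pts_h.T).T[:, :3]        # points in the camera frame
    values = np.full(n, np.nan)
    in_front = pts_c[:, 2] > 1e-6               # keep points ahead of the camera
    uvw = (K @ pts_c[in_front].T).T
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
    h, w = thermal.shape
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)
    idx = np.flatnonzero(in_front)[inside]      # original indices of visible points
    values[idx] = thermal[v[inside], u[inside]]
    return values
```

Running this over many frames gives several samples per point. Those samples are what a temporal smoothing step would then reconcile.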

Research

Apart from the engineering efforts, we also identified several research topics and pushed the state of the art in these areas toward more accurate, efficient, and robust algorithms.

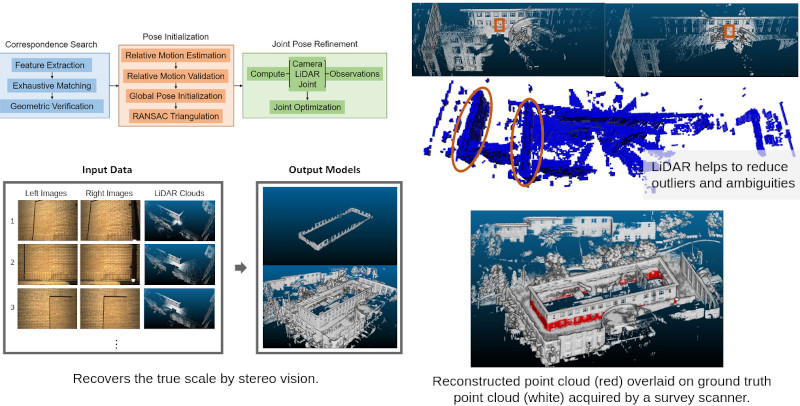

Stereo & LiDAR-enhanced SfM

We developed new algorithms that introduce stereo image constraints and LiDAR information into Structure-from-Motion (SfM). As a result, SfM becomes much more robust to noise and mismatches, and the reconstructed point clouds have the correct metric scale.
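Monocular SfM recovers the scene only up to an unknown global scale. A stereo rig fixes this because the physical baseline between its two cameras is known from calibration. The algorithms above build the stereo constraint into SfM itself. The post-hoc rescaling sketched below only illustrates the principle, assuming the SfM solution contains both cameras' centers for each stereo pair. The function name is hypothetical.

```python
import numpy as np


def rescale_to_baseline(points: np.ndarray, centers_left: np.ndarray,
                        centers_right: np.ndarray, baseline_m: float):
    """Hypothetical helper: scale an up-to-scale reconstruction to metric units.

    points:        (N, 3) reconstructed points (arbitrary scale).
    centers_left:  (M, 3) estimated left-camera centers.
    centers_right: (M, 3) estimated right-camera centers of the same stereo pairs.
    baseline_m:    calibrated stereo baseline in meters.
    Returns (scaled points, scale factor).
    """
    est = np.linalg.norm(centers_right - centers_left, axis=1)
    # The median over many stereo pairs is robust to a few bad pose estimates.
    s = baseline_m / np.median(est)
    return points * s, s
```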

Related work: Estimating the Localizability of Tunnel-like Environments using LiDAR and UWB.

High-resolution binocular stereo vision

We perform reconstruction from a single pair of 4K-resolution stereo images. One stereo pair yields about 12M reconstructed points, which preserves as much detail as possible. Deep-learning methods are used for the stereo matching.
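For a rectified stereo pair, each pixel's disparity d converts to depth via Z = f·B/d, where f is the focal length in pixels and B is the baseline. Back-projecting every valid pixel this way is why a single high-resolution pair can yield millions of points. The sketch below assumes rectified images, a disparity map from any matcher (for example a deep network), and hypothetical parameter names.

```python
import numpy as np


def disparity_to_points(disparity: np.ndarray, f_px: float, cx: float, cy: float,
                        baseline_m: float, min_disp: float = 0.5) -> np.ndarray:
    """Hypothetical helper: back-project a left-image disparity map to (N, 3) points.

    Assumes rectified images with the same focal length f_px on both axes and
    principal point (cx, cy). Pixels with disparity <= min_disp are dropped.
    """
    h, w = disparity.shape
    v, u = np.mgrid[0:h, 0:w]                    # row (v) and column (u) indices
    valid = disparity > min_disp
    z = f_px * baseline_m / disparity[valid]     # depth from Z = f * B / d
    x = (u[valid] - cx) * z / f_px
    y = (v[valid] - cy) * z / f_px
    return np.column_stack([x, y, z])
```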

Line-based 2D-3D localization

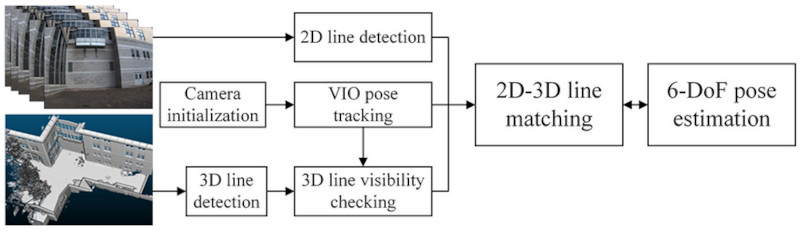

We exploit line features in the inspected scenes for better localization against a pre-built map. The same line features can also aid real-time visual odometry, making it more accurate and robust.
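Line-based 2D-3D localization typically scores a camera pose by how well projected 3D map lines align with detected 2D image lines. A common residual projects both endpoints of a 3D segment and measures their perpendicular distances to the infinite 2D line through the detected segment. The sketch below implements that residual. It is not claimed to be the exact cost used in the publications listed below, and the function names are hypothetical.

```python
import numpy as np


def project(K: np.ndarray, R: np.ndarray, t: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Pinhole projection of a world point X (3,) with pose x_cam = R @ X + t."""
    p = K @ (R @ X + t)
    return p[:2] / p[2]


def line_residual(K, R, t, P1, P2, q1, q2) -> np.ndarray:
    """Hypothetical helper: signed pixel distances from the projected 3D endpoints
    P1, P2 to the 2D line through the detected endpoints q1, q2."""
    # Homogeneous 2D line through q1 and q2, normalized so that l @ (u, v, 1)
    # is a signed point-to-line distance in pixels.
    l = np.cross(np.append(q1, 1.0), np.append(q2, 1.0))
    l = l / np.hypot(l[0], l[1])
    return np.array([l @ np.append(project(K, R, t, P), 1.0) for P in (P1, P2)])
```

Measuring distance to the infinite line, rather than between endpoints, tolerates the partial or broken detections that line detectors often produce.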

Line detection.

Visual odometry and localization by line matching.

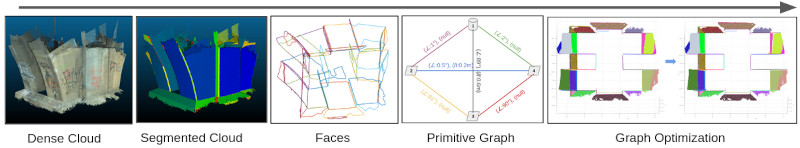

Abstract mapping

We turn a heavy point cloud map into a lightweight abstract map represented by geometric primitives such as second-order surfaces (quadrics). The abstract map is then used for faster localization.
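A quadric is the zero set of a second-order polynomial with ten coefficients, written x^T Q x = 0 for homogeneous x and a symmetric 4×4 Q. It can be fitted to a patch of points by algebraic least squares: take the right singular vector with the smallest singular value. The sketch below is a generic fit of this kind. The ICRA 2022 paper listed below uses a decomposed-quadric representation for SLAM optimization, which this sketch does not reproduce. The function names are hypothetical.

```python
import numpy as np


def _design(points: np.ndarray) -> np.ndarray:
    """Rows [x^2, y^2, z^2, xy, xz, yz, x, y, z, 1] for each point."""
    x, y, z = points.T
    return np.column_stack([x * x, y * y, z * z, x * y, x * z, y * z,
                            x, y, z, np.ones_like(x)])


def fit_quadric(points: np.ndarray) -> np.ndarray:
    """Hypothetical helper: unit-norm coefficients q of
    a x^2 + b y^2 + c z^2 + d xy + e xz + f yz + g x + h y + i z + j = 0
    minimizing ||A q|| over the (N, 10) design matrix A, with N >= 10 points."""
    _, _, Vt = np.linalg.svd(_design(points), full_matrices=False)
    return Vt[-1]  # right singular vector of the smallest singular value


def algebraic_residuals(points: np.ndarray, q: np.ndarray) -> np.ndarray:
    """Polynomial value at each point; near zero for points on the quadric."""
    return _design(points) @ q
```

Ten numbers per primitive, instead of thousands of raw points, is what makes such a map lightweight.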

Publications

- Unified Representation of Geometric Primitives for Graph-SLAM Optimization Using Decomposed Quadrics. By Zhen, W., Yu, H., Hu, Y. and Scherer, S. In 2022 International Conference on Robotics and Automation (ICRA), 2022.

- ORStereo: Occlusion-Aware Recurrent Stereo Matching for 4K-Resolution Images. By Hu, Y., Wang, W., Yu, H., Zhen, W. and Scherer, S. In 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 5671–5678, 2021.

- ULSD: Unified Line Segment Detection across Pinhole, Fisheye, and Spherical Cameras. By Li, H., Yu, H., Yang, W., Yu, L. and Scherer, S. In ISPRS Journal of Photogrammetry and Remote Sensing, vol. 178, pp. 187–202, 2021.

- Deep-Learning Assisted High-Resolution Binocular Stereo Depth Reconstruction. By Hu, Y., Zhen, W. and Scherer, S. In 2020 IEEE International Conference on Robotics and Automation (ICRA), pp. 8637–8643, 2020.

- LiDAR-enhanced Structure-from-Motion. By Zhen, W., Hu, Y., Yu, H. and Scherer, S. In 2020 IEEE International Conference on Robotics and Automation (ICRA), pp. 6773–6779, 2020.

- Line-Based 2D–3D Registration and Camera Localization in Structured Environments. By Yu, H., Zhen, W., Yang, W. and Scherer, S. In IEEE Transactions on Instrumentation and Measurement, vol. 69, no. 11, pp. 8962–8972, Jul. 2020.

- Monocular Camera Localization in Prior LiDAR Maps with 2D-3D Line Correspondences. By Yu, H., Zhen, W., Yang, W., Zhang, J. and Scherer, S. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020.

- A Joint Optimization Approach of LiDAR-Camera Fusion for Accurate Dense 3-D Reconstructions. By Zhen, W., Hu, Y., Liu, J. and Scherer, S. In IEEE Robotics and Automation Letters, vol. 4, no. 4, pp. 3585–3592, Oct. 2019.

- A Unified 3D Mapping Framework Using a 3D or 2D LiDAR. By Zhen, W. and Scherer, S. In International Symposium on Experimental Robotics, pp. 702–711, 2018.

- Achieving Robust Localization in Geometrically Degenerated Tunnels. By Zhen, W. and Scherer, S. In Workshop on Challenges and Opportunities for Resilient Collective Intelligence in Subterranean Environments, Pittsburgh, PA, 2018.

- Robust Localization and Localizability Estimation with a Rotating Laser Scanner. By Zhen, W., Zeng, S. and Scherer, S. In 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, pp. 6240–6245, 2017.

Contributors

Long term

Short term. Thank you so much for your help! (Alphabetical order)

- Andrew Saba

- Chenxi Ji, Intern from Tsinghua University, China

- Henry (Hengrui) Zhang

- Jingfeng Liu

- John Keller

- Longwen Zhang, Intern from ShanghaiTech University, China

- Punit Bhatt, MSCV at CMU

- Sam Zeng

- Weyne (Ruixuan) Liu, Intern at CMU